This is a presentation that I gave on Wednesday the 2nd of December 2020 at the AeRO (Australian eResearch Organizations) council meeting, at the request of the chair, Dr Carina Kemp.

Carina asked:

It would be really interesting to find out what is happening in the research data management space. And I’m not sure if it is too early, but maybe touch on what is happening in the EOSC Science Mesh Project.

The audience of the AeRO Council is AeRO member reps from AAF, AARNet, QCIF, CAUDIT, CSIRO, GA, TPAC, the University of Auckland, REANNZ, ADSEI, Curtin, UNSW, APO.

At this stage I was still the eResearch Support Manager at UTS - but I only had a couple of weeks left in that role.

In this presentation I’m going to start from a naive IT perspective about research data.

I would like to acknowledge and pay my respects to the Gundungurra and Darug people who are the traditional custodians of the land on which I live and work.

Research data is special - like snowflakes - and I don’t mean that in a mean way. Research data could be anything, any shape, any size. Researchers are also special: they are not always 100% aligned with institutional priorities, because they align with their disciplines, departments and research teams.

It’s obvious that buying storage doesn’t mean you’re doing data management well but that doesn’t mean it’s not worth restating.

So "data storage is not data management". In fact, the opposite might be true - think about buying a laptop - do you just get one that fits all your stuff and rely on getting a bigger one every few years? Or do you get a smaller main drive and learn how to make sure that your data's actually archived somewhere? That would be managing data.

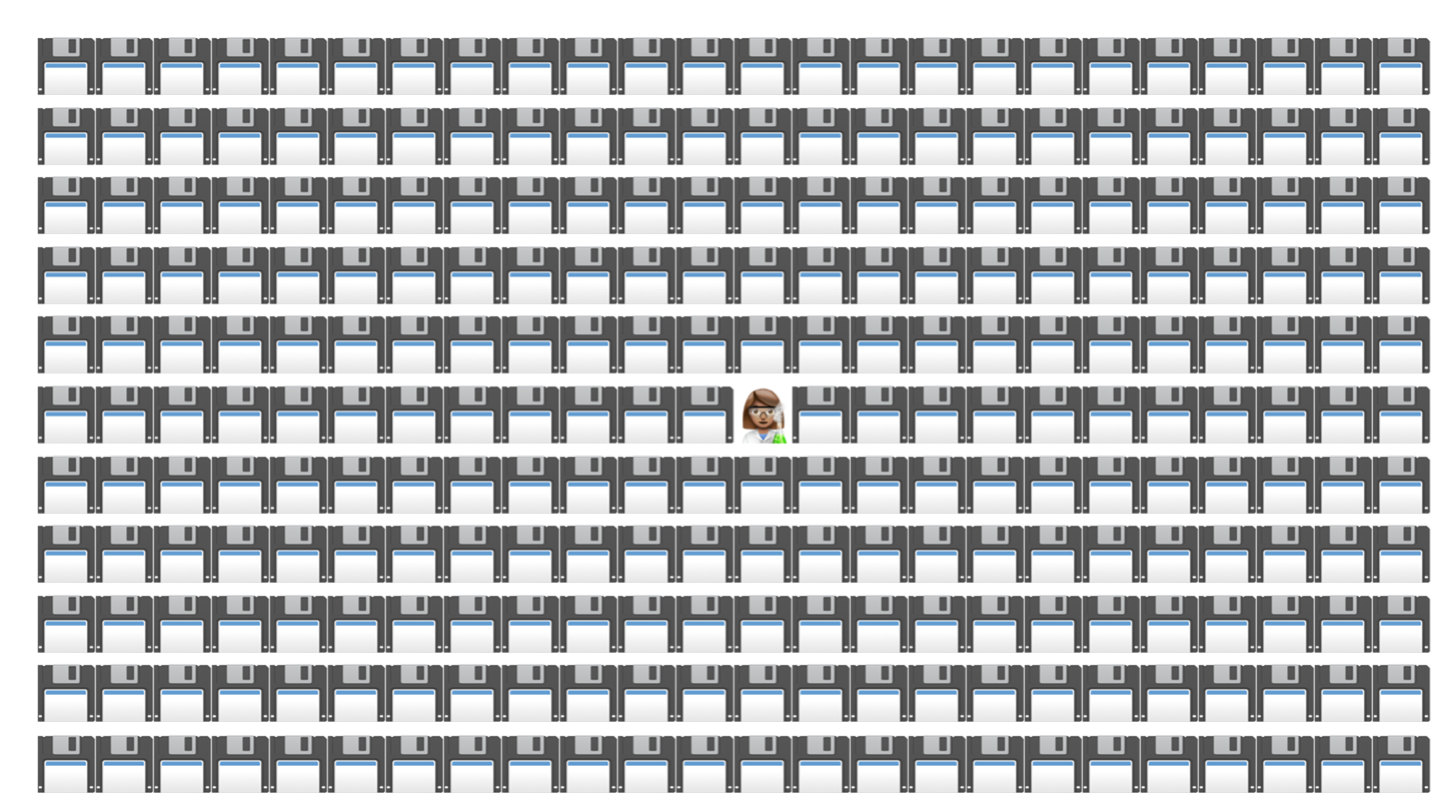

And remember that not all research data is the same “shape” as corporate data - it does not all come in database or tabular form - it can be images, video, text, with all kinds of structures.

There are several reasons we don’t want to just dole-out storage as needed.

- It’s going to cost a lot of money and keep costing a lot of money

- Not everything is stored in “central storage” anyway. There are share-sync services like AARNet’s CloudStor.

- Just keeping things doesn’t mean we can find them again

So far we’ve just looked at things from an infrastructure perspective but that’s not actually why we’re here, us with jobs in eResearch. I think we’re here to help researchers do excellent research with integrity, AND we need to help our institutions and researchers manage risk.

- The Australian Code for the Responsible Conduct of Research, which all research organizations need to adhere to if we get ARC or NHMRC grants, sets out some institutional responsibilities to provide infrastructure and training

- There are risks associated with research data: reputational risks, financial risks, and risks to the individuals and communities about whom we hold data

At UTS, we’ve embraced the Research Data Management Plan - as a way to assist in dealing with this risk. RDMPs have a mixed reputation here in Australia - some organizations have decided to keep them minimal and as streamlined as possible but at UTS the thinking is that they can be useful in addressing a lot of the issues raised so far.

- Where’s the data for project X - when there’s an integrity investigation. Were procedures followed?

- How much storage are we going to need?

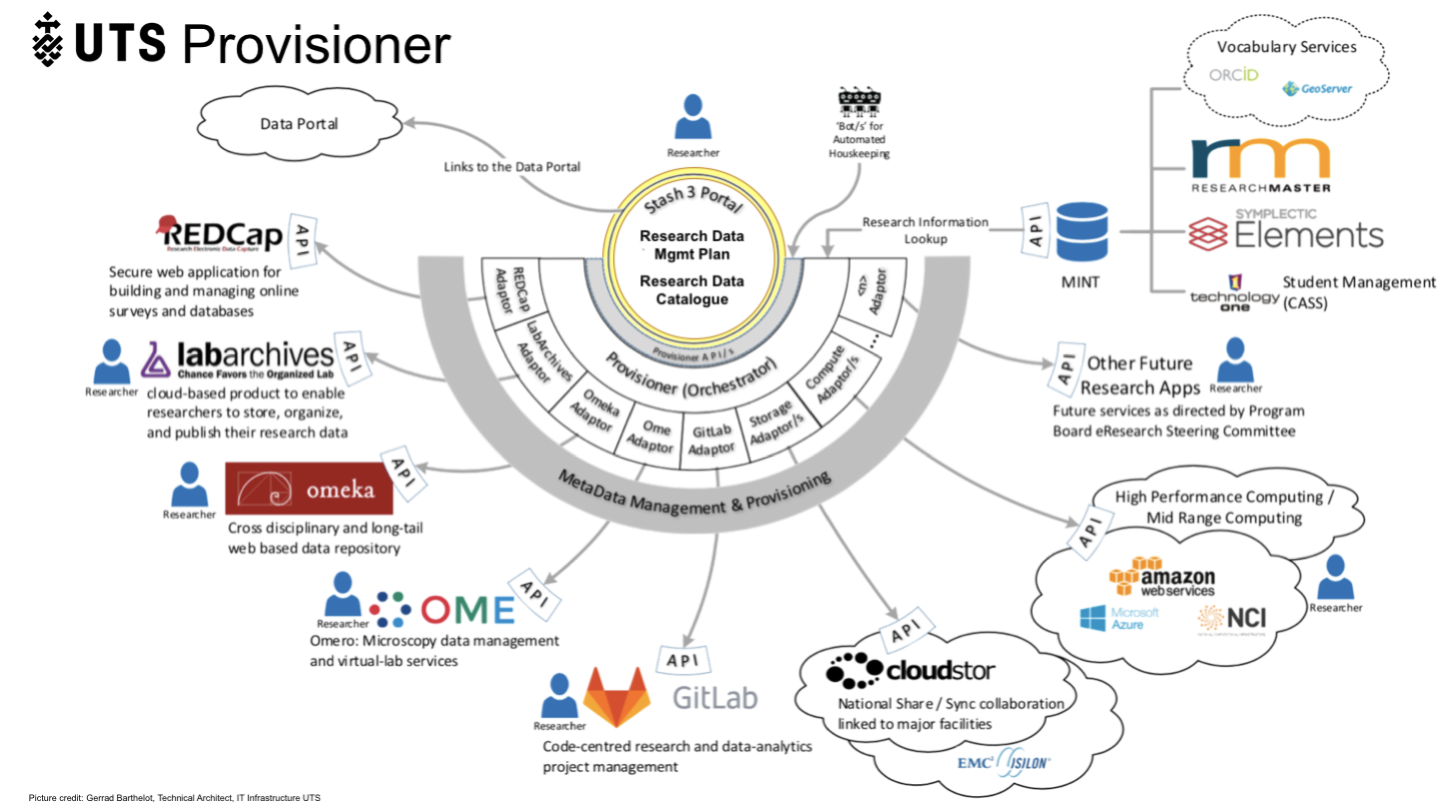

Inspired by the (defunct?) Research Data Lifecycle project, conceived by the organizations that later became the Australian Research Data Commons (ANDS, NeCTAR and RDSI), we came up with this architecture: a central research data management system (in our case the open source ReDBox system) loosely linked to a variety of research Workspaces, as we call them.

The plan is that over time, researchers can plan and budget for data management in the short, medium and long term, provision services and use the system to archive data as they go.

(Diagram by Gerard Barthelot at UTS)

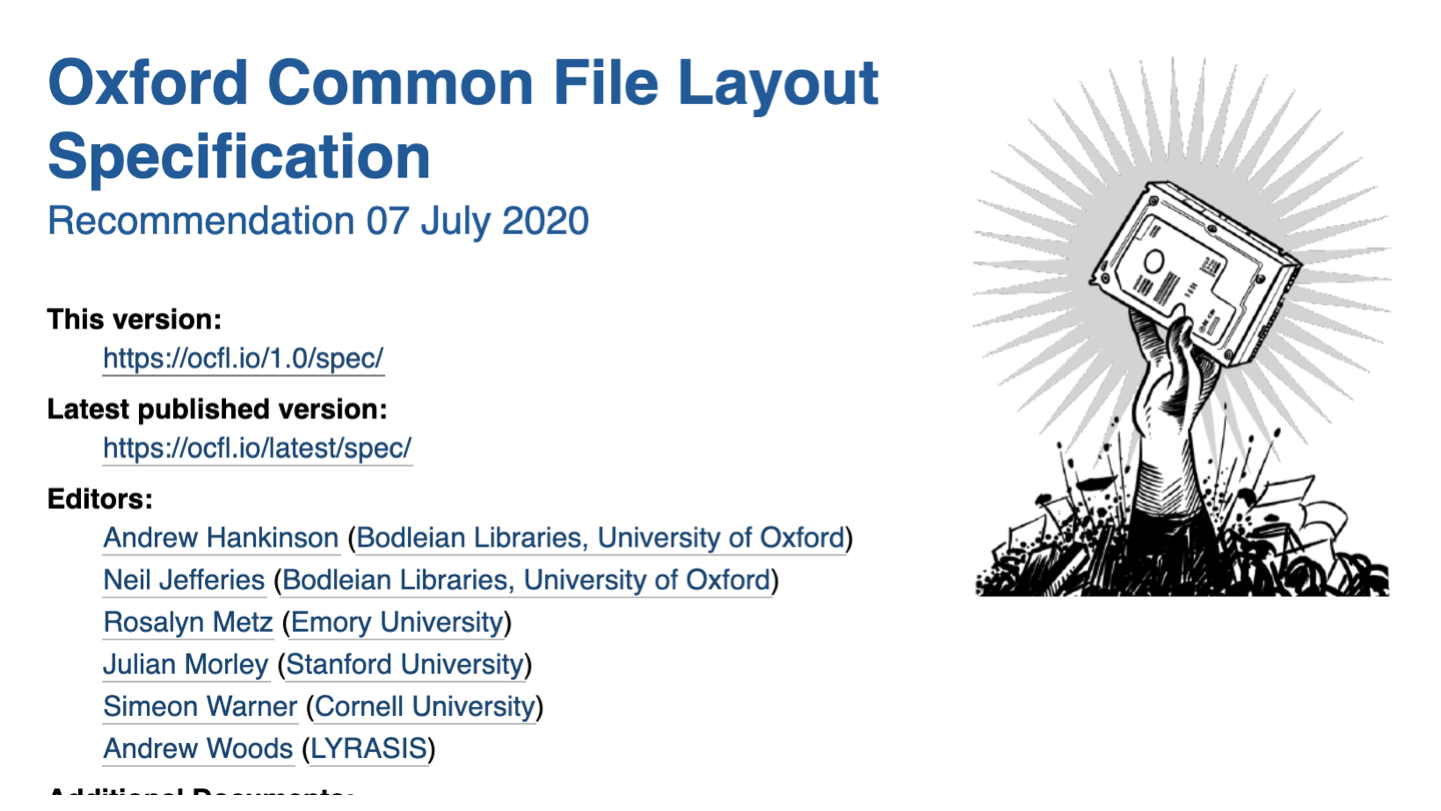

UTS has been an early adopter of the OCFL (Oxford Common File Layout) specification - a way of storing files sustainably on a file system (coming soon: S3 cloud storage) so that they do not need to be migrated. I presented on this at the Open Repositories conference.
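To make the idea of a file layout that needs no migration a little more concrete, here is a minimal Python sketch of what a single-version OCFL-style object looks like on disk. It is an illustration written for these notes, not the UTS implementation: the function name `write_ocfl_object`, the object identifier and the sample file are all hypothetical, the layout follows my reading of the OCFL 1.0 specification, and the result has not been run through an OCFL validator.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def write_ocfl_object(object_root: Path, object_id: str, files: dict) -> dict:
    """Write a one-version (v1) OCFL-style object; return its inventory.

    `files` maps logical paths (e.g. "data/notes.txt") to bytes.
    Hypothetical helper: simplified, with no validation or versioning.
    """
    content_dir = object_root / "v1" / "content"
    content_dir.mkdir(parents=True, exist_ok=True)

    manifest = {}  # digest -> paths where the bytes are stored in the object
    state = {}     # digest -> logical paths as seen in this version
    for logical_path, data in sorted(files.items()):
        target = content_dir / logical_path
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_bytes(data)
        digest = hashlib.sha512(data).hexdigest()
        manifest.setdefault(digest, []).append(f"v1/content/{logical_path}")
        state.setdefault(digest, []).append(logical_path)

    inventory = {
        "id": object_id,
        "type": "https://ocfl.io/1.0/spec/#inventory",
        "digestAlgorithm": "sha512",
        "head": "v1",
        "manifest": manifest,
        "versions": {
            "v1": {
                "created": datetime.now(timezone.utc).isoformat(timespec="seconds"),
                "message": "Initial deposit",
                "state": state,
            }
        },
    }
    inventory_bytes = json.dumps(inventory, indent=2).encode("utf-8")
    sidecar = f"{hashlib.sha512(inventory_bytes).hexdigest()} inventory.json\n"

    # Declaration file that marks this directory as an OCFL object.
    (object_root / "0=ocfl_object_1.0").write_bytes(b"ocfl_object_1.0\n")
    # The inventory and its digest sidecar live in the object root,
    # with a copy inside the version directory.
    for directory in (object_root, object_root / "v1"):
        (directory / "inventory.json").write_bytes(inventory_bytes)
        (directory / "inventory.json.sha512").write_bytes(sidecar.encode("utf-8"))
    return inventory


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp) / "example-object"
        write_ocfl_object(root, "urn:example:object-1", {"data/notes.txt": b"hello"})
        for path in sorted(root.rglob("*")):
            print(path.relative_to(root))
```

Everything needed to check and interpret the object (the content, the checksums and the version history) sits in plain files and JSON next to the data, which is why the layout does not depend on any particular repository software staying alive.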

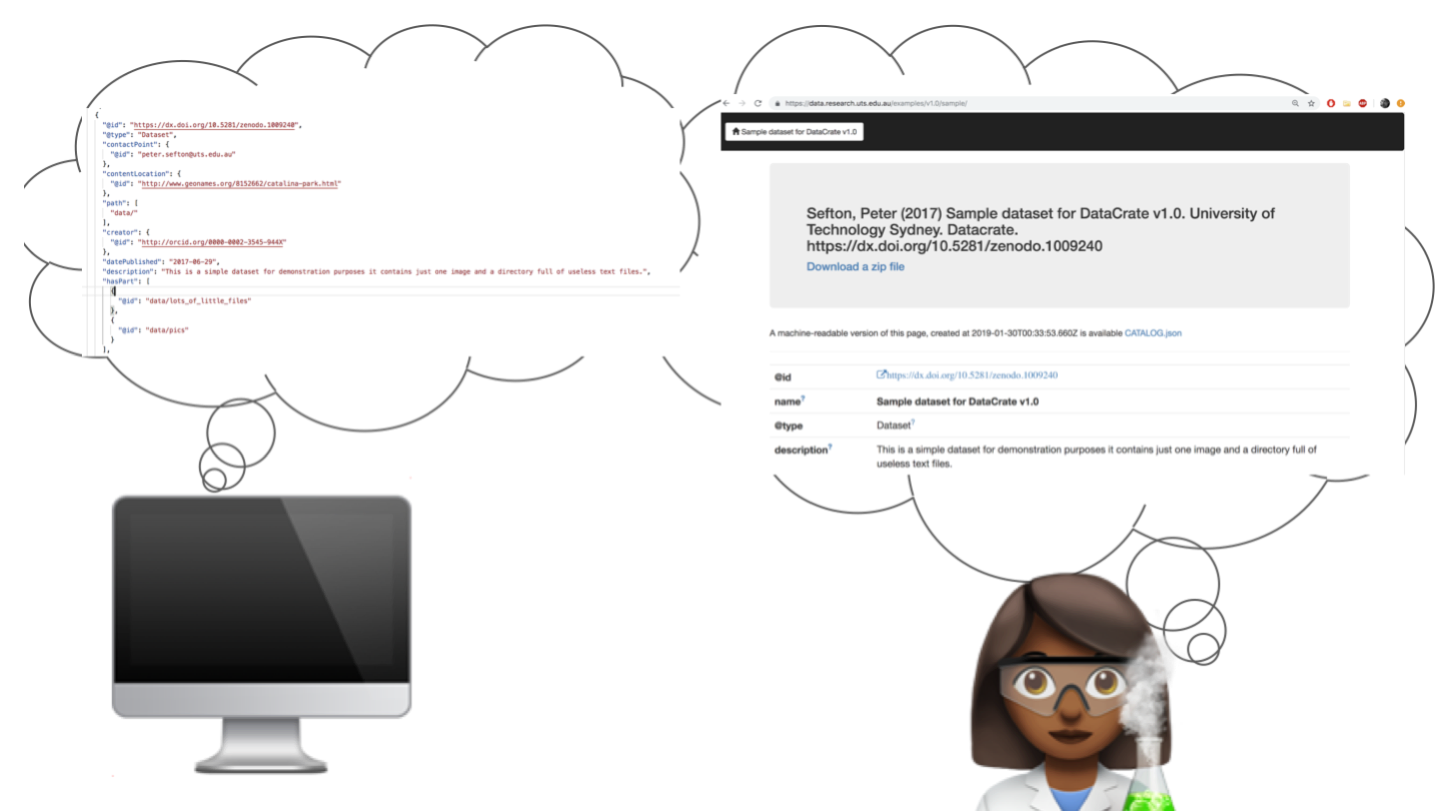

And at the same conference, I introduced the RO-Crate standards effort, which is a marriage between the DataCrate data packaging work we’ve been doing at UTS for a few years, and the Research Object project.
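For readers who have not seen one, an RO-Crate is essentially a directory of data plus a JSON-LD file, `ro-crate-metadata.json`, that describes it. The Python sketch below builds a minimal metadata document. It assumes the RO-Crate 1.1 conventions (the version used at the time of the talk may differ), and the helper name `build_ro_crate_metadata`, the dataset details and the file names are made up for illustration.

```python
import json


def build_ro_crate_metadata(name, description, date_published, license_id, files):
    """Return a minimal RO-Crate metadata document as a dict.

    `files` maps relative file paths to human-readable names.
    Hypothetical helper: a sketch, not a complete or validated crate.
    """
    # The metadata descriptor says which entity is the root of the crate.
    descriptor = {
        "@id": "ro-crate-metadata.json",
        "@type": "CreativeWork",
        "conformsTo": {"@id": "https://w3id.org/ro/crate/1.1"},
        "about": {"@id": "./"},
    }
    # The root dataset describes the package as a whole.
    root_dataset = {
        "@id": "./",
        "@type": "Dataset",
        "name": name,
        "description": description,
        "datePublished": date_published,
        "license": {"@id": license_id},
        "hasPart": [{"@id": path} for path in files],
    }
    # Each file gets its own entity, linked from the root via hasPart.
    file_entities = [
        {"@id": path, "@type": "File", "name": label}
        for path, label in files.items()
    ]
    return {
        "@context": "https://w3id.org/ro/crate/1.1/context",
        "@graph": [descriptor, root_dataset, *file_entities],
    }


if __name__ == "__main__":
    crate = build_ro_crate_metadata(
        name="Example interview collection",  # made-up example values
        description="A small illustrative dataset.",
        date_published="2020-12-02",
        license_id="https://creativecommons.org/licenses/by/4.0/",
        files={"interviews/001.txt": "Interview 1 transcript"},
    )
    print(json.dumps(crate, indent=2))
```

Because the description is plain JSON-LD using schema.org terms, the same package can be archived as-is or rendered into a website later.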

We created the Arkisto platform to bring together all the work we’ve been doing to standardise research data metadata, and to build a toolkit for sustainable data repositories at all scales from single-collection up to institutional, and potentially discipline and national collections.

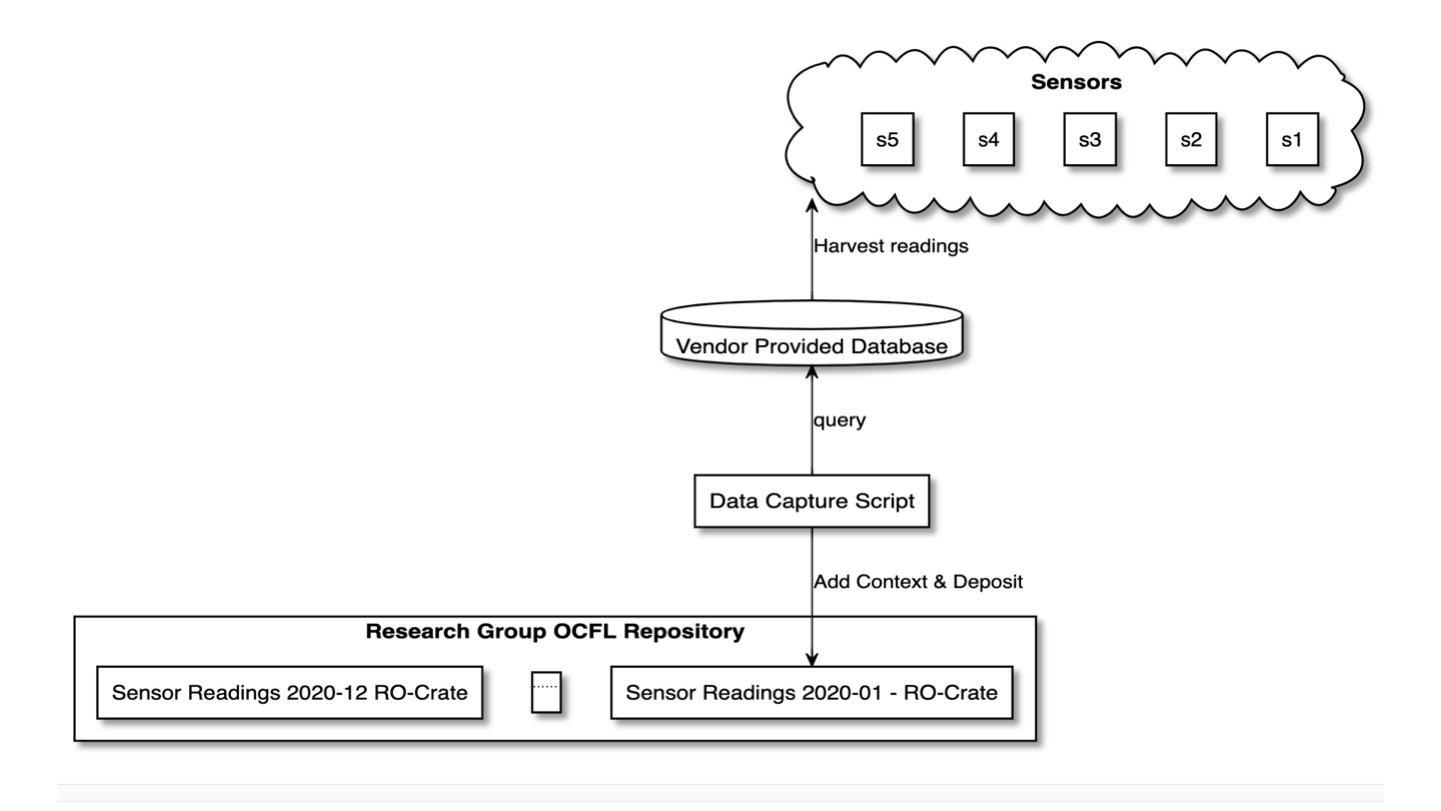

This is an example of one of many Arkisto deployment patterns; you can read more on the Arkisto Use Cases page.

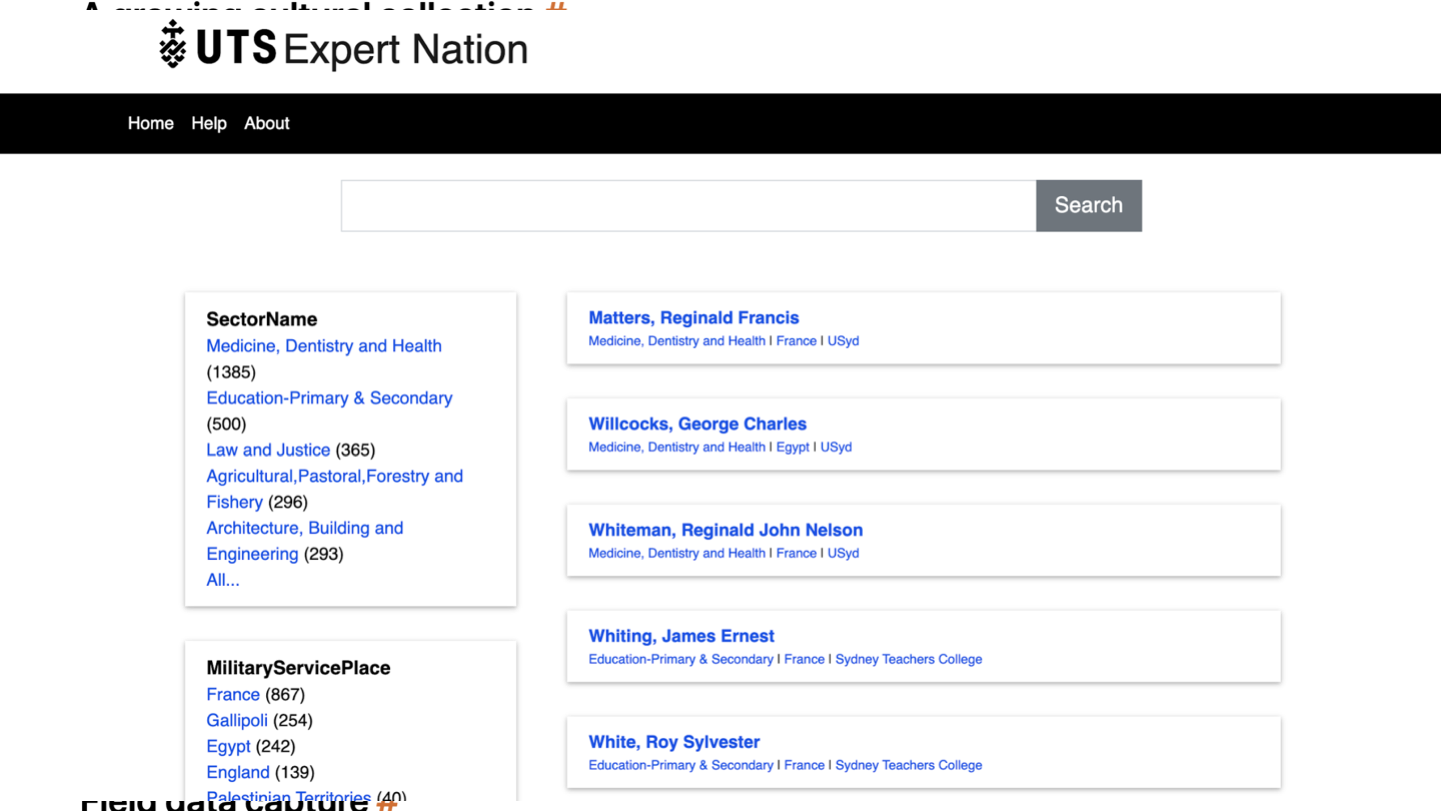

This is an example of an Arkisto-platform output: data exported from one content management system into an archive-ready RO-Crate package, which can then be made into a live site. This was created for Assoc Prof Tamson Pietsch at UTS. The website is ephemeral - the data will be Interoperable and Reusable (the I and R from FAIR) via the use of RO-Crate.

Now to higher-level concerns: I built this infrastructure for my chooks (chickens) - they have a nice dry box with a roosting loft. But most of the time they roost on the roof.

We know all too well that researchers don’t always use the infrastructure we build for them - you have to get a few other things right as well.

One of the big frustrations I have had as an eResearch manager is that the expectations and aspirations of funders and integrity managers and so on are well ahead of our capacity to deliver the services they want, and then when we DO get infrastructure sorted there are organizational challenges to getting people to use it. To go back to my metaphor, we can’t just pick up the researchers from the roof and put them in their loft, or spray water on them to get them to move.

Via Gavin Kennedy and Guido Aben from AARNet, Marco La Rosa and I are helping out with this charmingly named project, which is adding data management services to storage, synchronization and sharing services. Contracts are not yet in place, so I won't say much about this yet.

https://www.cs3mesh4eosc.eu/about (EOSC is the European Open Science Cloud.)

CS3MESH4EOSC - Interactive and agile sharing mesh of storage, data and applications for EOSC - aims to create an interoperable federation of data and higher-level services to enable friction-free collaboration between European researchers. CS3MESH4EOSC will connect locally and individually provided services, and scale them up at the European level and beyond, with the promise of reaching critical mass and brand recognition among European scientists that are not usually engaged with specialist eInfrastructures.

I told Carina I would look outwards as well. What are we keeping an eye on?

Watch out for the book factory. Sorry, the publishing industry.

The publishing industry is going to “help” the sector look after its research data.

Like, you know, they did with the copyright in publications. Not only did that industry work out how to take over copyright in research works, they successfully moved from selling us hard-copy resources that we could keep in our own libraries to charging an annual rent on the literature. They have got to the point where they can argue that they are essential to maintaining the scholarly record and MUST be involved in the publishing process, even when the (sometimes dubious, patchy) quality checks are performed by us who created the literature.

It’s up to research institutions whether this story repeats with research data - remember who you’re dealing with when you sign those contracts!

In the 2010s the Australian National Data Service (ANDS) funded investment in metadata stores; one of these was the ReDBox research data management platform, which is alive and well and being sustained by QCIF with a subscription maintenance service. But ANDS didn’t fund development of Research Data Repositories.

The work I’ve talked about here was all done with the UTS team.
