Skipped FridAI blogging last week because of Thanksgiving, but let’s get back on it! Top-of-mind today are the firing of AI queen Timnit Gebru (letter of support here) and a couple of grant applications that I’m actually eligible for (this is rare for me! I typically need things for which I can apply in my individual capacity, so it’s always heartening when they exist — wish me luck).

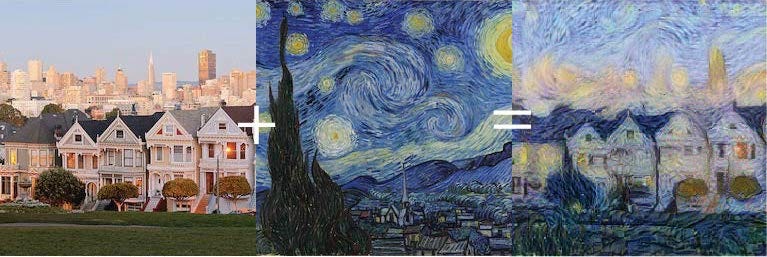

But for blogging today, I’m gonna talk about neural style transfer, because it’s cool as hell. I started my ML-learning journey on Coursera’s intro ML class and have been continuing with their deeplearning.ai sequence; I’m on course 4 of 5 there, so I’ve just gotten to neural style transfer. This is the thing where a neural net outputs the content of one picture in the style of another:

OK, so! Let me explain while it’s still fresh.

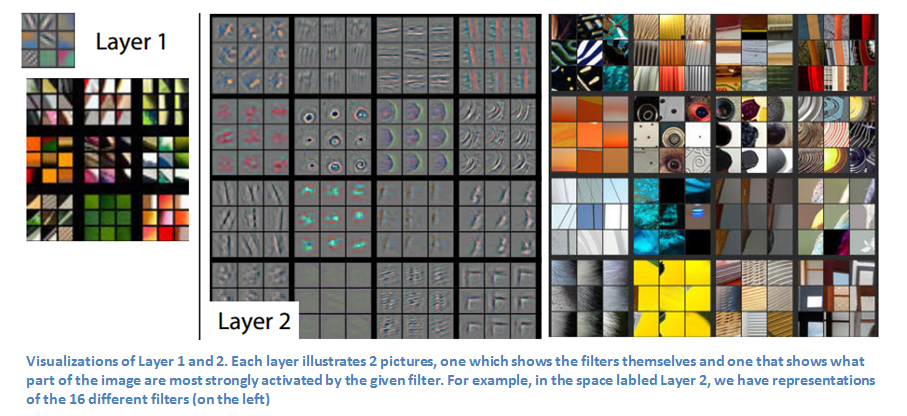

If you have a neural net trained on images, it turns out that each layer is responsible for recognizing different, and progressively more complicated, things. The specifics vary by neural net and data set, but you might find that the first layer gets excited about straight lines and colors; the second about curves and simple textures (like stripes) that can be readily composed from straight lines; the third about complex textures and simple objects (e.g. wheels, which are honestly just fancy circles); and so on, until the final layers recognize complex whole objects. You can interrogate this by feeding different images into the neural net and seeing which ones trigger the highest activation in different neurons. Below, each 3×3 grid represents the most exciting images for a particular neuron. You can see that in this network, there are Layer 1 neurons excited about colors (green, orange), and about lines of particular angles that form boundaries between dark and colored space. In Layer 2, these get built together like tiny image legos; now we have neurons excited about simple textures such as vertical stripes, concentric circles, and right angles.

So how do we get from here to neural style transfer? We need to extract information about the content of one image, and the style of another, in order to make a third image that approximates both of them. As you already expect if you have done a little machine learning, that means that we need to write cost functions that mean “how close is this image to the desired content?” and “how close is this image to the desired style?” And then there’s a wrinkle that I haven’t fully understood, which is that we don’t actually evaluate these cost functions (necessarily) against the outputs of the neural net; we actually compare the activations of the neurons, as they react to different images — and not necessarily from the final layer! In fact, choice of layer is a hyperparameter we can vary (I super look forward to playing with this on the Coursera assignment and thereby getting some intuition).

So how do we write those cost functions? The content one is straightforward: if two images have the same content, they should yield the same activations. The greater the differences, the greater the cost (specifically via a squared error function that, again, you may have guessed if you’ve done some machine learning).

The style one is beautifully sneaky; it’s a measure of the difference in correlation between activations across channels. What does that mean in English? Well, let’s look at the van Gogh painting, above. If an edge detector is firing (a boundary between colors), then a swirliness detector is probably also firing, because all the lines are curves — that’s characteristic of van Gogh’s style in this painting. On the other hand, if a yellowness detector is firing, a blueness detector may or may not be (sometimes we have tight parallel yellow and blue lines, but sometimes yellow is in the middle of a large yellow region). Style transfer posits that artistic style lies in the correlations between different features. See? Sneaky. And elegant.

Finally, for the style-transferred output, you need to generate an image that does as well as possible on both cost functions simultaneously — getting as close to the content as it can without unduly sacrificing the style, and vice versa.

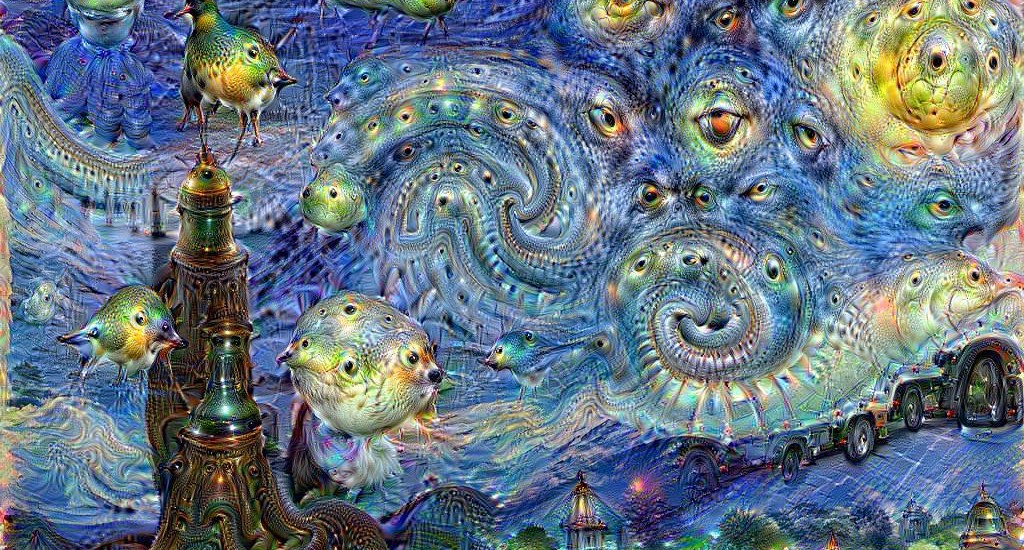

As a side note, I think I now understand why DeepDream is fixated on a really rather alarming number of eyes. Since the layer choice is a hyperparameter, I hypothesize that choosing too deep a layer — one that’s started to find complex features rather than mere textures and shapes — will communicate to the system, yes, what I truly want is for you to paint this image as if those complex features are matters of genuine stylistic significance. And, of course, eyes are simple enough shapes to be recognized relatively early (not very different from concentric circles), yet ubiquitous in image data sets. So…this is what you wanted, right? the eager robot helpfully offers.

I’m going to have fun figuring out what the right layer hyperparameter is for the Coursera assignment, but I’m going to have so much more fun figuring out the wrong ones.