VOL. 3, No. 3

MOOCs are re-operationalising traditional concepts in education. While they draw on elements of existing educational and learning models, they represent a new approach to instruction and learning. The challenges MOOCs present to traditional education models have important implications for approaching and assessing quality. This paper foregrounds some of the tensions surrounding notions of quality, as well as the need for new ways of thinking about and approaching quality in MOOCs.

Massive open online courses (MOOCs) are online courses that facilitate open access to learning at scale. However, the interpretation and employment of the MOOC dimensions are not consistent, resulting in considerable variation in purpose, design, learning opportunities and access among different MOOC providers and individual MOOCs. The combinations of technology, pedagogical frameworks and instructional designs vary considerably between individual MOOCs. Some MOOCs reproduce offline models of teaching and learning, focusing on the organisation and presentation of course material while drawing on the Internet to open up these opportunities to a wider audience (Margaryan, Bianco, & Littlejohn, 2015). Others combine the opportunities presented by digital technologies with new pedagogical approaches and the flexibility of OER to design new learning experiences (Gillani & Eynon, 2015).

The four dimensions of a MOOC – massive, open, online and course – have been interpreted and implemented broadly:

Massive refers to the scale of the course and alludes to the large number of learners who participate in some MOOCs. Designing MOOCs involves considering how to disseminate content effectively and support meaningful interactions between learners (Downes, 2013) as well as how to devise new forms of education that enable high quality teaching and learning opportunities to occur at scale. Successful large-scale online education is expensive to produce and deliver (Ferguson & Sharples, 2014, 98). Also, learning ‘through mass public media’ is limited in its effectiveness for several reasons. First, learning usually requires a high degree of agency and self-regulation by the learner (Ferguson & Sharples, 2014, 98; Milligan, Littlejohn & Margaryan, 2013). Second, learners are able to ‘drop in’ or ‘drop out’ of a MOOC, largely due to the open nature of courses where registration is open for the duration of the course. High dropout rates should be anticipated, since not all learners intend to complete the course or gain a certificate, bringing into question ‘drop out’ measures (Littlejohn & Milligan, 2015; Jordan, 2015). Third, MOOCs potentially attract diverse types of learners, which leads to complex design requirements, though the early MOOCs have tended to attract learners who have already participated in university education (Zhenghao et al., 2015). The large-scale access to learning that MOOCs enable has implications not only for attracting and supporting large numbers of learners but also for designing the learning systems and developing the necessary pedagogy to support all of these different types of learners.

Open has multiple meanings in relation to MOOCs. It may refer to access; anyone, no matter his or her background, prior experience or current context, may enrol in a MOOC. Open can also refer to cost; that is, a MOOC is available free of charge. A third meaning of open relates to the open nature of knowledge acquisition in a MOOC, including the employment of open educational resources (OER) or Open CourseWare (OCW) which is available under a Creative Commons licence. Open also relates to knowledge production and the opportunity for the remixing and reuse of the resources developed during a MOOC by the instructors and by the learners themselves to create new knowledge (Milligan, Littlejohn & Margaryan, 2013). However, the philosophy of openness on which MOOCs were founded is being challenged. Platform providers, as well as the organisations that offer MOOCs, are experimenting with different pricing models. These include paying for certification, to sit a proctored exam, to receive course credit, or to work towards a degree (see for example http://tinyurl.com/zhmuuo6). The current open access model, which allows anyone to enrol in a MOOC, also is being challenged by the growing recognition that not everyone is adequately prepared, with the necessary autonomy, dispositions and skills, to engage fully in a MOOC. The informal, largely self-directed nature of learning in MOOCs and the lack of support or interpersonal connections during a course mean that, despite being open to anyone, learning opportunities are in reality restricted to those with the necessary knowledge, skills and dispositions to engage independently.

Online aspects of MOOCs increasingly are being blurred, as MOOCs are used in blended learning contexts to supplement in-person school and university classes (Bates, 2014; Bruff, Fisher, McEwen, & Smith, 2014; Caulfield, Collier, & Halawa, 2013; Firmin et al., 2014; Holotescu, Grosseck, Cretu, & Naaji, 2014). In a review of the evidence surrounding the integration of MOOCs into offline learning contexts, Israel (2015) determines that while the blended approach leads to comparable achievement outcomes to traditional classroom settings, the use of MOOCs tended to be associated with lower levels of learner satisfaction. Downes (2013) suggests that for an online course to qualify as a MOOC no required element of the course should have to take place in a specific physical location. However, this requirement does not preclude additional offline interactions taking place. It is important to recognise that no online course is bounded to the online context. Learning is distributed across and informed by the multiple contexts of a learner’s life. How and why a learner engages with a MOOC is determined by both their current situation and their personal ontogeny. The learning context of a MOOC also is situated within and across the institutional contexts of the specific course creator and the platform provider. Recognising and addressing the multiple, and at times competing, contexts in which each MOOC is situated is critical to discussions of quality.

Course conceptualisation varies across different MOOCs (Figure 1). According to Downes (2013) three criteria must be met for a MOOC to be regarded as a ‘course’: (1) it is bounded by a start and end date; (2) it is cohered by a common theme or discourse; and (3) it is a progression of ordered events. While MOOCs typically are bounded, this may be manifest in different ways. MOOCs initially started as structured courses, designed to parallel in-person, formal learning, such as university classes, with start and end dates. However, an increasing number of MOOCs are not constrained by specific start or end dates (Shah, 2015), facilitating a self-paced learning model. The length of courses also varies, with some constructed as a series of shorter modules, which may be taken independently or added together to form a longer learning experience. Patterns of learner engagement vary substantially in MOOCs. Conole (2013) suggests that participation can range from completely informal, with learners having the autonomy and flexibility to determine and chart their own learning journey, to engagement in a formal course, which operates in a similar manner to offline formal education. Reich (2013) has questioned whether a MOOC is a textbook (a transmitter of static content) or a course because of the conflicts that exist around confined timing and structured versus self-directed learning, the tension between skills-based or content-based objectives, and whether certification is included (or indeed achieved by learners). Siemens (2012) argues that the primary tension in MOOC conceptualisation is between the transmission model and the construction model of knowledge and learning. Rather than being viewed as a course, MOOCs could be conceptualised as a means by which learners construct and ultimately define their own learning (ibid).

Thus, the term ‘MOOC’ is being applied to such a wide range of learning opportunities that it provides limited insight into the educational experience being offered. The specific nature and composition of individual MOOCs are profoundly shaped by, and ultimately the product of, their designers and instructors, the platform and platform provider, and the participants, who each bring their own frames of reference and contextual frameworks. Therefore, any discussion or attempt to quantify or qualify notions of quality in MOOCs requires the exploration of the complexities and diversity in designs, pedagogies, purposes, teacher experiences and roles, and participant motivations, expectations and behaviours present in MOOCs (Ross, Sinclair, Knox, & Macleod, 2014; Mackness, Mak, & Williams, 2010; Milligan, Margaryan, & Littlejohn).

Quality Indicators: Presage, Process and Product Variables

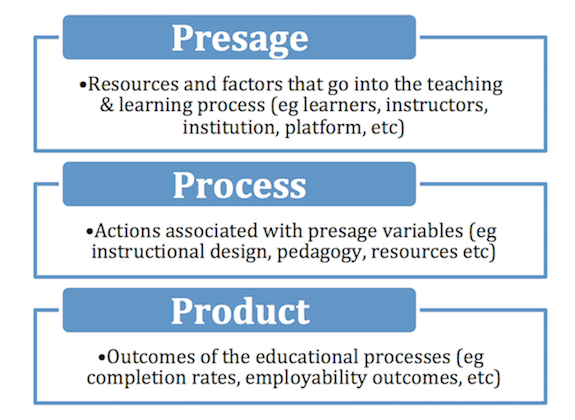

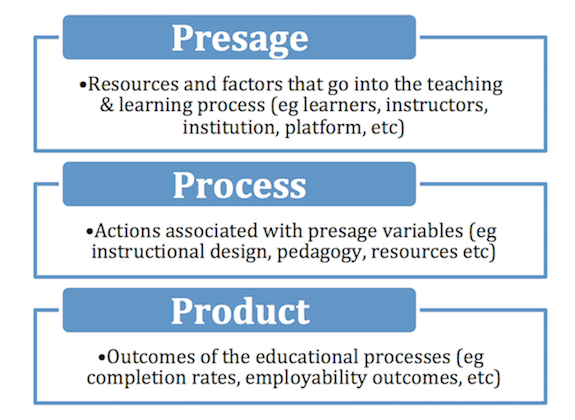

Quality measures must take into consideration the diversity among MOOCs as well as the various, and often competing, frames of reference of different stakeholders – learners, instructors, organisations and governments. Dimensions of quality in education have been structured and organised using a model developed by Biggs (1993), the ‘3P model’ (Gibbs, 2010). The model conceptualises education as a complex set of interacting ecosystems. To understand how a particular ecosystem (i.e., a MOOC) operates or its impact, the course is broken down into its constituent parts to examine how these parts relate to each other and how they combine to form a whole. It further is necessary to understand each MOOC ecosystem in relation to other ecologies. Biggs (1993) provides a useful model to examine the variables that can be measured to assess the quality of learning (see Figure 1).

Figure 1: Biggs’ 3P Model

Biggs divides each learning ecosystem into three types of variables – presage, process and product variables. Presage variables are the resources and factors that go into the teaching and learning process, including the learners, instructors, institution, and in the case of MOOCs the platform and platform provider. Process variables refer to the processes and actions associated with the presage variables, including instructional design, pedagogical approaches, and learning resources and materials. Product variables are the outputs or outcomes of the educational processes.
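The three categories described above can be pictured as a simple grouping of indicators. The following is a minimal sketch only, not part of Biggs' model or of any instrument cited in this paper: the class name `MoocQualityProfile`, the indicator names and the example values are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class MoocQualityProfile:
    """Hypothetical container grouping quality indicators by 3P category."""

    # Presage: resources and factors that go into teaching and learning
    # (learners, instructors, institution, platform and platform provider).
    presage: dict = field(default_factory=dict)
    # Process: instructional design, pedagogical approaches, resources.
    process: dict = field(default_factory=dict)
    # Product: outputs or outcomes of the educational processes.
    product: dict = field(default_factory=dict)

    def categories(self):
        """Return the names of the categories that hold at least one indicator."""
        return [name for name in ("presage", "process", "product")
                if getattr(self, name)]


# Illustrative values only; none of these figures come from the paper.
example = MoocQualityProfile(
    presage={"open_admission": True, "learners_per_instructor": 5000},
    process={"peer_feedback_activities": 3},
)
print(example.categories())  # ['presage', 'process']
```

Keeping the three groups separate in this way makes it easier to ask which process indicators plausibly connect a given presage condition to a given product measure, which is the kind of relationship the model is intended to expose.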

Presage Variables: Provider and Instructor; Learner; Platform

Conventional measures of presage variables include student to staff ratios both across an institution as a whole as well as within individual courses, the quality of teaching staff (often measured by job role or teaching qualification), the allocation of teaching funding, and the prior qualifications of students entering an institution or the acceptance rate.

MOOCs disrupt these traditional measures. They are non-selective, with open admission, and are frequently designed to have a single instructor teaching thousands of learners. This has resulted in calls for quality measures that recognise the diversity of learners and the openness of a course (Butcher, Hoosen, Uvalić-Trumbić, & Daniel, 2013; iNACOL, 2011; QM, 2013; Rosewell & Jansen, 2014). These measures have important implications for process and product variables.

The MOOC platform plays an important role in determining the access, reach and nature of the course on offer. It further influences the instructional design, the technology that is available, and possible cost structures. Platforms are experimenting with new course structures, such as incorporating greater intentionality into course design by creating MOOCs with more practical outcomes for learners (Shah, 2015). Platform providers, such as Coursera, edX and FutureLearn, are also experimenting with different cost structures, including offering pay-for credentialing and course credit opportunities, and some providers have developed their own credentials.

The MOOC Provider can be anyone. The United States Government, the World Bank, the American Museum of Natural History, the Museum of Modern Art (New York), Google and AT&T are some of the many organisations that have run MOOCs. To date, however, most MOOCs have been created by instructional designers in universities – first by a group of researchers in Canada (Downes, 2008; Downes, 2009), then by prominent institutions worldwide. This has led some commentators to suggest that MOOCs are merely an exercise in brand promotion (Conole, 2013). Others imply that MOOCs promote and reinforce distinctions between well-known research universities and large corporations, who are the producers of MOOCs (and controllers of knowledge), and less-affluent universities, which do not necessarily have the financial resources to produce MOOCs and consequently are the consumers of MOOCs (Rhoads, Berdan, & Toven-Lindsey, 2013). Tensions and power imbalances between MOOC creators, the courses they develop, and the learning they support on the one hand, and learners on the other, are highlighted by many universities not offering credit for the MOOCs that they offer (Adamopoulos, 2013).

The use of high quality content resources and activities (Amo, 2013; Conole, 2013; Margaryan et al., 2015) and the opportunities for quality knowledge creation throughout the course of the MOOC (Guardia, Maina, & Sangra, 2013) are hallmarks of effective instructional design. Sound technology use is also important to the design and delivery of high quality learning experiences and opportunities (Amo, 2013; Conole, 2013; Guardia, Maina, & Sangra, 2013; Istrate & Kestens, 2015). However, Dillenbourg and colleagues (2014) warn of the tension between ‘edutainment’ and supporting deep learning, and the danger of providing ‘overpolished’ and entertaining materials without first considering the pedagogical approaches within which they are used. Research suggests the need for quality measures that evaluate both content and resource design and learner engagement with content and resources. There already exist a number of quality criteria that are used by universities both for accreditation and to maintain internal standards that could be extended, potentially in a modified form, to MOOCs (Dillenbourg et al., 2014). Examples of these frameworks that have been expanded to address MOOCs include the QM Quality Matters guide, iNACOL, and OpenUpEd. These could be used in conjunction with new technology-enabled measures of learner engagement. One such example is the Precise Effectiveness Strategy, which purports to calculate the effectiveness of learners’ interactions with educational resources and activities (Munoz-Merino, Ruiperez-Valiente, Alario-Hoyos, Perez-Sanagustin, & Delgado Kloos, 2015).

Data suggest that the MOOC instructor has a significant impact on learner retention in MOOCs (Adamopoulos, 2013). Further research suggests that instructors’ participation in discussion forum activity and active support of learners during the running of a MOOC positively influence learning outcomes (Coetzee, Lim, Fox, Hartman, & Hearst, 2011; Deslauriers, 2011). Ross et al. (2014) argue for the importance of acknowledging the complexity of teacher positions and experiences in MOOCs and how these influence learner engagement.

Although there were around 35 million MOOC Learner registrations in 2015 (Shah, 2015), data suggest that MOOCs currently are not attracting as diverse a body of learners as hoped, with most learners having a degree level qualification (Christensen et al., 2013; Ho et al., 2014). However, there is considerable variety in learners’ motivations for enrolling in a MOOC. Common factors include: interest in the topic, access to free learning opportunities, the desire to refresh knowledge, opportunity to draw on world-class university knowledge, and to gain accreditation (Davies et al., 2014; Winthrup et al., 2015). Christensen et al. (2013) found that nearly half of MOOC students reported their reason for enrolling as “curiosity, just for fun”, while 43.9% cited the opportunity to “gain skills to do my job better.” Motivation determines how a person engages with a learning opportunity both cognitively and behaviourally, and therefore is a mediating factor in relation to other quality measures.

Low MOOC completion rates have been viewed as problematic. However, passive engagement can be considered a valid learning approach, and is not always indicative of a lack of learning (Department for Business, Innovation and Skills, 2013). The majority of learners in MOOCs are not adhering to traditional expectations or learning behaviours. Consequently, they do not necessarily measure success as engaging with all of the content or completing the activities and achieving a certification of completion (Littlejohn, Hood, Milligan, & Mustain, 2016). Successful learning in MOOCs increasingly is learner driven and determined. As a result, traditional quality measures related to outcome variables (such as completion rates or grades) may be of limited relevance to MOOCs (Littlejohn & Milligan, 2015).

Confidence, prior experience and motivation have been found to mediate engagement (Milligan, Littlejohn, & Margaryan, 2013). It further has been suggested that learners’ geographical location affects accessibility to MOOCs as well as interest in topics (Liyanagunawardena, Adams, & Williams, 2013), with demographic information able to be used as an intermediary characteristic to explain behaviour in a MOOC (Skrypnyk, Hennis, & Vries, 2014). Further research has identified a relationship between learners’ behaviour and engagement, and their current contexts, including occupation (Hood, Littlejohn, & Milligan, 2015; Wang & Baker, 2015; de Waard et al., 2011), as well as a relationship between learners’ learning objectives and their learning outcomes (Kop, Fournier, & Mak, 2011). Learners’ prior education experience also has been found to influence their retention in a MOOC (Emanuel, 2013; Koller et al., 2013; Rayyan, Seaton, Belcher, Pritchard, & Chuang, 2013) and their readiness to learn (Bond, 2015; Davis, Dickens, Leon, del Mar Sanchez Ver, & White, 2014; Kop et al., 2011), with more experienced learners typically finding it easier to navigate the unstructured nature of learning in a MOOC (Lin, Lin, & Hung, 2015).

When discussing and assessing quality in MOOCs it is necessary to situate the MOOC, the learning opportunities it provides and individual learners within the multiple ecosystems in which they interact. One of the disrupting forces in a MOOC is that it provokes a move in thinking about quality from the perspective of the instructor, institution and platform provider to that of the learner. Therefore, establishing reliable measures of confidence, experience and motivation, which extend beyond self-report, could provide a more accurate view of quality than conventional learner metrics.

Process Variables – Pedagogy and Instructional Design

The flexibility of participation and the self-directed nature of engagement, which enables learners to self-select the learning opportunities and pathways they follow when participating in a MOOC (de Boer et al., 2014), necessitate the re-operationalisation of many process variables. Questions emerge regarding the balance between structure (intended to provide direction) and self-regulation, between broadcast and dialogue models of delivery, whether MOOCs should offer edutainment or deep learning opportunities, and whether and how to promote homophily or diversity in learners’ engagement and participation.

MOOC Instructional Design and the use of different tools and resources influence engagement and support learning in MOOCs (Margaryan et al., 2015). Outcome measures of retention and completion are often used as proxies for learning when assessing process variables. However, these are not necessarily accurate measures of learning in MOOCs, where participation is often self-directed, with learners following individual, asynchronous pathways for which there is no correct or prescribed route (de Boer et al., 2014). The diversity of learners’ goals and motivations for taking a MOOC must be addressed within its instructional design, allowing for learner autonomy (Mackness, Waite, Roberts, & Lovegrove, 2013) and flexible learning patterns. However, this flexibility must be situated within an overarching, coherent design, which incorporates adequate support structures. Daradoumis and colleagues (2013) and Margaryan et al. (2015) found that while MOOCs allow for individual learning journeys, there is a problematic lack of designed customisation and personalisation that responds to learner characteristics. Designing a MOOC based on participatory design and activity-based learning facilitates learning that is relevant to learners (Hew, 2014; Istrate & Kestens, 2015; Mor & Warburton, 2015).

There are strong links between the diversity of learners (presage variable) that MOOCs can attract and the need to incorporate differentiated pathways and learner-centred designs. Learner-centred design takes into consideration the diversity of the learner population and the need to provide learning activities that cater to and support different learning styles and needs (Alario-Hoyos, Perez-Sanagustin, Cormier, & Delgado-Kloos, 2014; Guardia, Maina, & Sangra, 2013; Hew, 2014; Margaryan et al., 2015). The design should offer opportunities for personalised learning (Istrate & Kestens, 2015) as well as drawing on learners’ individual contexts and previous experience (Scagnoli, 2012).

It also is important to support and scaffold learning, by making sure support structures are integrated into the MOOC design (Skrypnyk, de Vries, & Hennis, 2015). Learning supports can be developed through the incorporation of accessible materials and instructors who actively contribute to and support learners (Hew, 2014), as well as through opportunities for peer assistance (Amo, 2013; Guardia et al., 2013). However, the ratio of instructors to learners in MOOCs raises concern (Dolan, 2014; Kop, Fournier, & Mak, 2011). Learning and data analytics increasingly are being used to guide the learner and instructor, with tutors receiving predictive analytics about each of their students and using this data to target their support (Rienties et al., 2016) or learners being ‘nudged’ to focus attention (Martinez, 2014).

Interaction and collaboration encompass both instructor-learner interactions and learner-to-learner collaborations. A relationship has been identified between learners’ participation in discussion forums and completion (Gillani & Eynon, 2014; Kizilcec et al., 2013; Sinha et al., 2014), though the reasons for this are uncertain. Analysis of discussion forum posts indicates a wide variation in the content and topics (Gillani et al., 2014). However, a correlation has been detected between the intensity of activity and course milestones (ibid). Higher performing students engage more frequently in MOOC discussion forums; however, their interactions are not restricted to other high-performing students. Discussion forums also provide an important information source for instructors about their students and how they are engaging with the content (Rosé, Goldman, Zoltners Sherer, & Resnick, 2015).

Opportunities for strategic use of feedback (from both peers and instructors) are important elements of effective instructional design (Alario-Hoyos, 2014; Amo, 2013; Conole, 2013; Margaryan et al., 2015). Receiving targeted, relevant, informative feedback in a timely manner is important for supporting students’ learning (Hattie, 2009). However, in their analysis of 76 MOOCs Margaryan and colleagues (2015) found there were few opportunities for high quality instructor feedback. There is evidence of the predictive power of data and learning analytics to offer insight into learning (Tempelaar, Rienties, & Giesbers, 2015). New techniques are being developed, including technology for analysing discussions for learning (Howley, Mayfield, & Rosé, 2013), the formation of discussion groups (Yang, Wen, Kumar, Xing, & Rosé), and indicators of motivation, cognitive engagement and attitudes towards the course (Wen, Yang, & Rosé, 2014a, 2014b). Developing measures capable of capturing interactions quantitatively as well as qualitatively will facilitate a richer understanding of how interactions and collaboration support student learning and engagement, as well as how they contribute to the fulfilment of individual learners’ goals. Research has investigated how formative and summative feedback can be generated (Whitelock, Gilbert, & Wills, 2013), how MOOCs could operate as foundational learning experiences before traditional degree courses (Wartell, 2012) and how and whether university credit might be offered by more MOOCs (Bellum, 2013; Bruff, Fisher, McEwen, & Smith, 2013).

Learning analytics could be used to better personalise and tailor MOOCs to learners (Daradoumis, Bassi, Xhafa, & Caballe, 2013; Kanwar, 2013; Lackner, Ebner & Khalil, 2015; Sinha et al., 2014; Tabba & Medouri, 2013). Developing quality indicators that can be used in conjunction with learning analytics could provide powerful measures of pedagogically effective technology use in MOOCs.

Product Variables – Learners and Learning

In conventional education the most commonly used indicators of learning quality are progression and completion rates and employment statistics (Gibbs, 2010). However, the use of these indicators as MOOC quality measures is highly problematic, since completion is not always the goal of individual learners (Littlejohn et al., 2016) and therefore not an appropriate measure of the quality of learning on its own.

Particular learner behaviours – engagement in discussion forums (Gillani et al., 2014), completion of weekly quizzes (Admiraal et al., 2015), and routine engagement over the course of a MOOC (Loya, Gopal, Shukla, Jermann, & Tormey, 2015; Sinha et al., 2014) – correlate positively with completion levels. As such they can be interpreted as facilitators of the learning process. However, completion is not synonymous with satisfaction, the achievement of goals, or learners’ perceptions of successful learning (Koller, Ng, Do, & Chen, 2013; Littlejohn et al., 2016; Wang & Baker, 2015). Further evidence indicates that learners who ‘lurk’, engage passively, or do not complete the full course report overall experiences of a MOOC that are as positive as those of learners who completed it (Kizilcec et al., 2013; Milligan et al., 2013).

There is a need to measure other product variables that reflect the diverse and contextualised patterns of participation and the range of outcomes in MOOCs. These should include the individual motivations and goals of learners, both as they are conceptualised at the start of a course and as they develop over time. This will enable the development of differentiated product variables as well as the tracking of individual learners’ engagement with MOOC resources, assessment – both formative and summative – and feedback, interaction with others, and patterns of communication. This profile of individual learners should also include background information on learners, including demographic data, prior learning experiences, and behavioural data.

Using learning analytic techniques to analyse combinations of demographic details, academic and social integration, and social and behavioural factors, together with within-course behaviour, can predict different types of performance (Agudo-Peregrina et al., 2014; Credé & Niehorster, 2012; Marks, Sibley, & Arbaugh, 2005; Macfadyen & Dawson, 2010; Tempelaar et al., 2015). Other useful product variables include post-MOOC outcomes, such as career progression (Zheng et al., 2015), network outcomes and future study.

Indicators of MOOC Quality

Dimensions of MOOC quality depend largely on two variables: the MOOC’s purpose and the perspective of the particular actor. The diversity of learners in a MOOC, the range of purposes for which MOOCs are designed, and the various motivations individual learners have for engaging with a MOOC mean that it is not possible to identify a universal approach for measuring quality. Furthermore, the difficulties in operationalising many of the dimensions of quality – either quantitatively or qualitatively – make assessments of quality challenging.

Daniel (2012) suggests that MOOCs could be evaluated by learners and educators, with the aim of producing league tables that rank courses (there are several examples of this already happening). He suggests that poorly performing courses would either disappear due to lack of demand, or would undertake efforts to improve quality. Uvalić-Trumbić (2013) suggests assessing MOOCs against the question ‘What is it offering to the student?’. However, given the diversity among MOOC participants, the answer to this question would differ for each student.

Another route forward is to equate quality with participation measures (Dillenbourg et al., 2013). The primary focus would be on assessments of the learning outcomes of individual participants, thereby placing the learner at the centre of measures of quality. This is in keeping with the growing focus in the research on developing multiple measures of learner behaviour, motivations and engagement, through the employment of various learning and data analytic techniques. Dillenbourg and colleagues (2013) suggest that multiple assessments of individual learners’ participation measures could also inform the evaluation of cohorts of learners, instructional design decisions and the learning outcomes that result from them, and instructors, whose quality is dependent on outcomes of the course.

This focus on the learner and the relationship between product and process variables seems to be central to the quality of MOOCs. If the learning outcomes – as measured through a range of variables and indicators – are perceived to represent a high quality learning experience, then by implication the process variables – the various dimensions of pedagogy and instructional design – are appropriate in this context. However, if the learners’ experiences and resulting learning outcomes are not positive, then the process variables may be deemed as less suitable to that particular context, even if conforming to a pre-developed list of guidelines. The aim, therefore, is to ensure that the gap between initial expectations and the final perceptions of the delivered learning experience is as small as possible. That is, the process variables lead to the desired product variables and outcome measures.
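One way to make the idea of a gap between expectations and perceptions concrete is sketched below. This is an assumption-laden illustration rather than a validated instrument: the function name `expectation_gap`, the rating dimensions, the shared rating scale and the choice of a mean absolute difference are all hypothetical, and the ratings shown are invented for the example.

```python
def expectation_gap(expected, perceived):
    """Mean absolute difference between expected and perceived ratings.

    Both arguments map a (hypothetical) dimension name to a rating on the
    same scale. Only dimensions present in both mappings are compared.
    """
    shared = sorted(set(expected) & set(perceived))
    if not shared:
        raise ValueError("no shared dimensions to compare")
    return sum(abs(expected[d] - perceived[d]) for d in shared) / len(shared)


# Invented ratings for a single learner, collected before and after a course.
expected = {"feedback": 4, "flexibility": 5, "peer_interaction": 3}
perceived = {"feedback": 2, "flexibility": 5, "peer_interaction": 4}
print(expectation_gap(expected, perceived))  # 1.0
```

A value of zero would indicate that the delivered experience matched what the learner anticipated on every rated dimension. An absolute difference treats exceeding and falling short of expectations alike; a signed difference would be needed to distinguish the two.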

Rather than coming to a single conclusion about quality in MOOCs, this paper has attempted to explore some of the tensions and challenges associated with quality and to identify a range of variables that can be used to measure quality in MOOCs. It is clear that conventional measures and indicators of quality are not always appropriate for MOOCs. Similarly, given the diversity among MOOC offerings, it is unlikely that there is one clear route forward for assessing quality. Biggs’ 3P model provides a framework for identifying variables and measures associated with quality and for exploring the relationships between them. The aim here was to explain the possible uses of each variable, and where possible, to identify potential measures and instruments that can be used to measure them.

Quality is not objective. It is a measure for a specific purpose. In education, purpose is not a neutral or constant construct. The meaning and purpose ascribed to education shifts depending on the context and the actor, with governments, institutions, instructors, and learners approaching education from different viewpoints and consequently viewing quality through different lenses. Since MOOCs shift agency towards the learner, there is a need to foreground learner perspectives, using various measures of learner perceptions, behaviours and actions, and experiences as the foundation for assessing quality.

Acknowledgements

The authors extend their thanks to the Commonwealth of Learning and Dr Sanjaya Mishra for supporting the project on “Developing quality guidelines and a framework for quality assurance and accreditation of Massive Open Online Courses”. Full reports of the project are published as two separate publications by COL, which can be accessed at http://oasis.col.org

Authors

Dr. Nina Hood is a research fellow at the Faculty of Education at the University of Auckland. Her research is focused on the role that digital technologies can play in supporting and enhancing education, and in particular facilitating professional learning opportunities and knowledge mobilisation. Email: n.hood@auckland.ac.nz