When mom told you to make eye contact during conversation, you may have been getting more than a lesson in good manners.

Kathleen Eberhard, assistant professor of psychology at the University of Notre Dame, is using eye-tracking technology to explore how we move our eyes to gauge whether another person understands what we're saying. Her research is being driven by a theory of natural language use developed by Stanford University professor Herbert Clark.

"He argues that conversation is like any joint cooperative social activity," says Eberhard, who is assisted in her lab by graduate student Sarah Boyd and several undergraduates. Such activities are undertaken to reach a common goal, whether that be to dance a waltz or play catch. In the case of conversation, the goal would be the successful sharing of information, meaning speakers should check for listener feedback at various points in their telling of a story.

Eberhard's project to investigate this hypothesis is an innovative one.

"As far as I know," she says, "while there have been a number of previous studies that used videotapes in face-to-face conversations, none of them have used an eye-tracker, and so they don't get a precise measurement of exactly where the speaker is looking."

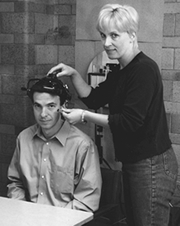

Each trial of the experiment requires two subjects. The first reads a seven-page Brothers Grimm folktale and, after taking a multiple-choice quiz on it, puts on headgear that, thanks to a harmless infrared beam, will track his or her eye movements while telling the story to the other subject. A video camera is also focused on the speaker so that Eberhard can follow eye movements relative to his or her body language. The listener is asked to take the same quiz to ensure that he or she pays attention to the speaker.

Because the story is organized into very recognizable scenes and sub-scenes, Eberhard says one would expect the speaker to consider the information leading up to these "breaks" to be the most important ideas to convey.

"The question of interest is going to be: Given points where there are major breaks, are we seeing the speaker then look at the listener's face for evidence of feedback, and does the kind of feedback that the listener provides reflect (how crucial the detail is to) the story?"

Boyd has been going through the footage of the conversations, noting every listener reaction, from head nods to "m-hmms," and transcribing all of the dialogue between subjects. With such a thorough data set, Eberhard also wants to explore whether there is any relation between where we look on a listener's face and the feedback we expect to receive, as well as whether uttering an "uhh" or "umm" corresponds with a look away from the person to whom we are talking.

Eberhard and her team of students presented their preliminary findings at the Third International Workshop on Language Production at Northwestern University in August. Among other results, they found that speakers looked at their listeners' faces even more frequently than hypothesized (an average of once every three seconds), with approximately 81 percent of these looks occurring after they conveyed one of the story's basic points. An average of 73 percent of the listeners' acknowledgements of understanding occurred when speakers looked at their faces.

"These findings support the proposal that, in a narrative dialogue, a primary purpose of a speaker's look at the listener's face is to obtain evidence that the listener understands the meaning of the utterance," Eberhard says.

Once completed, she expects her team's research to have a number of practical uses, ranging from the development of therapies for patients with brain damage to technological applications. A better idea of how and when we expect someone to indicate they understand us would allow designers to get closer to creating computers that respond to dialogue more like humans.
