Considering Outreach Assessment: Strategies, Sample Scenarios, and a Call to Action

In Brief:

How do we measure the impact of our outreach programming? While there is a lot of information about successful outreach activities in the library literature, there is far less documentation of assessment strategies. There may be numerous barriers to conducting assessment, including a lack of time, money, staff, knowledge, and administrative support. Further, many outreach activities are not tied back to institutional missions and event goals, meaning they are disjointed activities that do not reflect particular outcomes. High attendance numbers may show that there was excellent swag and food at an event, but did the event relate back to your missions and goals? In this article, we examine the various kinds of outreach that libraries are doing, sort these activities into six broad categories, explore assorted assessment techniques, and include a small survey about people’s experience and comfort with suggested assessments. Using hypothetical outreach scenarios, we will illustrate how to identify appropriate assessment strategies to evaluate an event’s goals and measure impact. Recognizing there are numerous constraints, we suggest that all library workers engaging in outreach activities should strongly consider incorporating goals-driven assessment in their work.

by Shannon L. Farrell and Kristen Mastel

Introduction

During times of competing interests and demands for funding, where do libraries stand? All types of libraries are feeling the pressure to demonstrate return on investment and the value of libraries to their constituents and communities. In recent reports gathered by the American Library Association, public libraries have demonstrated that for every dollar invested, three dollars (or more) of value are generated (Carnegie Mellon University Center for Economic Development, 2006; Bureau of Business and Economic Research, Labovitz School of Business and Economics, University of Minnesota Duluth, 2011). In academic libraries, the focus has been on demonstrating correlations between library use and students’ grades, retention, and graduation rates (Oakleaf, 2010; Soria, Fransen, and Nackerud, 2013; Mezick, 2007), illustrating that student library use is positively correlated with higher grade point averages and improved second-year retention and four-year graduation rates. Most of these methods utilize quantitative measures such as gate counts, circulation statistics, and other count-based data. Although these techniques tell a portion of the story of libraries’ impact, they do not tell the whole story. Many libraries use various kinds of outreach to reach and engage with users, but numbers alone cannot capture the impact of outreach programming. Other types of measures are needed in order to assess participants’ perception and level of engagement.

In reviewing the library literature, we found many articles that explain how to do a specific type of outreach. However, these articles rarely discuss how to assess the outreach activities beyond head counts, nor do they examine how the activities were tied to particular goals. Outreach is most effective when tied to institutional goals. To measure success we must begin with a goal in mind, as this can help staff prioritize activities, budgets, and time. Zitron (2013) outlines a collage exercise that can help create an outreach vision that is mapped to the institution’s mission and specific goals. Once the foundation is laid, you can create activities that fulfill the goals, along with alternative plans to anticipate various situations. In their paper about creating video game services, Bishoff et al. (2015) demonstrate how their institutional environment had a large impact on the kinds of services that were developed. They discovered that their “just for fun” gaming events were neither well received nor tied to the libraries’ goals. After some assessment and reflection, they shifted their focus to student and faculty-driven activities, such as a showcase of gaming technology and discussions related to trends in the gaming industry and gaming-related research.

Without goal-driven activities and assessment, how are the time, money, and energy justified? Conducting assessment involves several challenges, including those related to time and budget constraints, staffing levels, and staff education. However, there are many strategies that can be employed, ranging from those that are quick and easy to those that are more time-consuming and complex. In this paper, we will outline the various categories of outreach that are prevalent in libraries, compile recommended assessment strategies, and use sample scenarios to illustrate how various assessment strategies can be applied in conjunction with defined goals. Lastly, we will explore people’s comfort and familiarity with various assessment techniques through an informal survey, leaving you with a call to action to employ assessment in your daily work and outreach efforts.

Outreach Categories

There are as many definitions for library outreach as there are creative activities and strategies. While there is no single definition of outreach within the library community, there are certainly themes across the profession. Schneider (2003) identifies three factors to consider regarding outreach, “whether a need is expressed from outside the academy, whether they see their mission as an invitation to pursue an action on their own accord, or whether they construct a form of outreach in response to a specific problem or crisis.” In addition, it could be said that all outreach involves marketing library services, collections, and spaces. “Library marketing is outreach. It is making people aware of what we can do for them, in a language they can understand... [we] need to tell people we’re here, explain to them how we can help, and persuade them to come in through the doors, virtual or physical” (Potter, 2012). Emily Ford (2009) states, “Instead of integrating library promotion, advocacy, and community-specific targeted services, we have left ‘outreach’ outside of the inclusive library whole to be an afterthought, a department more likely to get cut, or work function of only a few, such as your subject librarians.” Outreach should not fall on the shoulders of just one individual. In order to be successful, a team-based approach is needed to generate and execute strategies. Gabrielle Annala (2015) affirms Ford, and reflects that outreach is too broad of a term, and that we should instead describe exactly what we are doing in terms that are meaningful to our target audience. She states that outreach is actually a compilation of activities from advocacy, public relations, publicity, promotion, instruction, and marketing.
Hinchliffe and Wong (2010) discuss how the six dimensions of the “wellness wheel” (intellectual, emotional, physical, social, occupational, and spiritual) can help libraries form partnerships across departments and serve patrons by considering their whole selves when developing resources and services. In this article, we view outreach as activities and services that focus on community and relationship-building, in addition to marketing collections and services to targeted audiences.

There are numerous kinds of outreach activities that occur in libraries. In order to get an idea of the range of activities, we looked in the library literature, surveyed colleagues, and examined lists of past and upcoming events on a handful of academic and public library websites. Although there is a wide range of activities, they appear to fall under a few broad categories. The variety of outreach activities is reflected in the survey conducted by Meyers-Martin and Borchard (2015) of programming provided by academic libraries during finals. Meyers-Martin and Borchard looked at the survey respondents’ budgets, who they partner with, their marketing, and how much time they spent coordinating the activities. Here we build on Meyers-Martin and Borchard’s groupings and propose some broad categories for outreach activities. Activities were categorized based on what we interpreted as the primary intention. We acknowledge that most outreach crosses numerous categories, so the categories cannot be viewed singularly, but rather are themes that are touched upon during marketing, public relations, instruction, and programming. Each category is accompanied by some illustrative examples. Some of the examples we use could theoretically be placed in another category (or multiple categories), based on the intention and goals of the activity.

Knowing the kinds of outreach that are being used in libraries helps us to think about the kinds of assessment measures that might be appropriate for various activities. Further, institutions can use the following groupings as a starting point to evaluate if they are employing diverse strategies or are targeted in one particular area.

Category #1: Collection-Based Outreach

Activities in this category are those that are linked to a library’s collection, or parts of a library’s collection. Despite this, there is a lot of variety in the kinds of outreach that can fall within this category, ranging from library-led book clubs, to author talks, to scavenger hunts (where patrons are asked to find specific items within the library). Other examples of collection-based outreach include: summer reading programs, community read programs (where members of a particular community all read the same book at the same time), “blind date with a book” (where books are wrapped up in paper to conceal their titles and placed in a display to be checked out), exhibits (physical or online), and pop-up libraries (portable libraries, with materials from the home library’s collection). Numerous libraries have built community around “one book” programs (Evans, 2013; Hayes-Bohanan, 2011; Schwartz, 2015) and summer reading programs (Dynia, Plasta, and Justice, 2015; Brantley, 2015), along with bringing materials outside the library walls (Davis et al., 2015).

Category #2: Instruction & Services-Based Outreach

Activities in this category focus on presentations and public guidance regarding library services. Typical activities that would be placed in this category are: workshops (e.g. how to access new e-book platforms), classes (e.g. data management classes that are linked to library data management services; Carlson et al., 2015), and roving reference (where librarians go to alternate locations to answer reference questions; Nunn and Ruane, 2015; Henry, Vardeman, and Syma, 2012).

Category #3: “Whole Person” Outreach

Zettervall (2014) coined the term “whole person librarianship” and states that it “is a nascent set of principles and practices to embed social justice in every aspect of library work.” Activities in this category are primarily concerned with helping people on an individual level and helping them make personal progress in some aspect of their life. Many of these activities revolve around health-based activities (e.g. health screenings, crisis support) and stress reduction programs (e.g. animal therapy, yoga), but many other services can fall in this category as well (including homework help, job search assistance, and tax preparation).

While many libraries have implemented animal therapy for stress relief during finals (Jalongo and McDevitt, 2015), or introduced programs to develop literacy skills (e.g. K-12 students reading aloud to dogs; Inklebarger, 2014), libraries have also been on the front lines supporting their communities during a crisis, such as providing a safe refuge for school children in Ferguson (Peet, 2015), or providing internet access, tablets, and charging stations after Hurricane Sandy (Epps and Watson, 2014).

Category #4: Just For Fun Outreach

Activities in this category are those that are typically “just for fun”. They focus on providing a friendly or welcoming experience with the library and/or redefining the library as place. Examples include: arts and crafts activities, Do-It-Yourself (DIY) festivals (Hutzel and Black, 2014; Lotts, 2015), concerts, games/puzzles (Cilauro, 2015), and coloring stations (Marcotte, 2015).

Category #5: Partnerships and Community-Focused Outreach

Activities in this category are primarily focused on creating partnerships and working with community groups. This may also include working to cross-promote services with other organizations, promoting library collections/services at community-focused events, or providing space for external groups (e.g. anime or gaming clubs) within the library. Partnering with outside organizations can aid in expanding the library’s reach to non-library users or underserved populations, such as partnering with a disability center for digital literacy training (Wray, 2014), or improving access to health information through an academic-public library partnership (Engeszer et al., 2016).

Category #6: Multi-Pronged Themed Events and Programming

Activities in this category take place on a large scale, and usually involve numerous activities and levels of support, frequently over several days. Many of these events involve a combination of collection-based outreach, instruction, and events that are “just for fun”, among other things. Examples include: National Library Week (Chambers, 2015), Banned Books Week (Hubbard, 2009), History Day (Steman and Post, 2013), and college and university orientation events (Boss, Angell, and Tewell, 2015; Noe and Boosinger, 2010; Johnson, Buhler, and Hillman, 2010).

Assessment Strategies

We hope that the above categories will help you reflect on the types of outreach that you provide in order to develop effective outreach assessment strategies. There are a variety of assessment methods that can be employed to determine if your outreach activity or event was successful in terms of your pre-determined goals. Some methods may take more time, involve more staff, or necessitate having a larger budget. Some are only appropriate under certain conditions. Further, different methods will yield different types of data, from hard numbers (quantitative data), to quotes or soundbites (qualitative data), to a combination of the above. There is no one perfect method; each has its pros and cons.

When determining which method to use, you need to keep in mind what you are hoping to learn. Are you primarily interested in the number of people who attended? Do you want to know if particular demographic groups were represented? Do you want to know how satisfied participants were with the event? Do you want to know if people associated the event with the library? Do you want to know if participants walked away knowing more about the library? Each of these questions would need different types of data to yield meaningful answers, and thus, would require thinking about data collection ahead of time. In addition, keep in mind the audience for your outreach activity and let that inform your assessment strategies (e.g. outreach services off-site or with an older population might warrant a postcard survey whereas a teen poetry slam might involve gathering social media responses).

When developing an assessment strategy it is best not to work in a vacuum. If at all possible, you should solicit people’s feedback on whether the strategy fits the goal. Depending on the scale of the event or activity, you may need to solicit help or employ volunteers to gather data. Depending on the amount or type of data generated, you may need help analyzing and/or coding data, generating statistics, or producing charts and graphs.

We have compiled several assessment strategies here based on an Audiences London (2012) report that discusses assessment strategies related to outdoor events and festivals. Although this report was not library-focused, we believe these strategies can be utilized by libraries that conduct outreach.

Strategies:

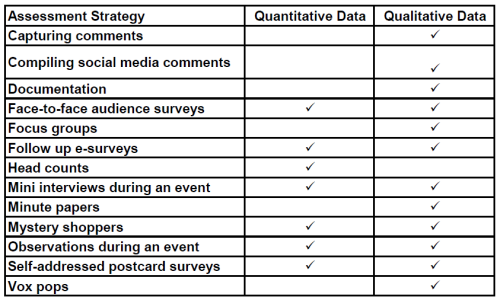

Definitions of each of the strategies follow. Table 1 illustrates the kind of data that each strategy generates.

- Capturing comments: Collect thoughts of motivated participants; can occur on paper, white boards, or other media (e.g. Bonnand and Hanson, 2015).

- Compiling social media comments or press cuttings: Gather coverage of an event through social media, newspapers, and other media outlets (e.g. Harmon and Messina, 2013; Murphy and Meyer, 2013).

- Documentation: Capture photographs and anecdotes in a document or report to paint an overall picture of an event.

- Face-to-face audience surveys: Administer questionnaires to participants at the event, led by an interviewer (e.g. Wilson, 2012).

- Focus groups: Interview participants in groups, following the event (e.g. Walden, 2015).

- Follow up e-surveys: Collect email addresses during the event and distribute an e-survey via collected emails following the event.

- Head counts: Count the number of people present at an event.

- Mini interviews during the event: Conduct very short interviews during the event, led by a staff member or volunteer. The type of interview conducted can vary, whether it asks an open-ended question or has a set list of questions to be answered.

- Minute papers: Ask participants to take one minute to write down an answer to a question. As the name suggests, this takes only one minute and provides rapid feedback. The time involved is in generating a meaningful question and in analyzing and coding the results (e.g. Choinski, 2006).

- Mystery shoppers: Recruit ‘undercover’ trained volunteers to evaluate your event and report experiences (e.g. Benjes-Small and Kocevar-Weidinger, 2011).

- Observations during the event: Note how participants move through the event and how they interface and interact with the event’s content (e.g. Bedwell and Banks, 2013).

- Self-addressed postcard surveys: Use a self-addressed postcard to deliver a short survey.

- Vox pops: Document participants’ thoughts and feelings via short audio or video recordings.

Table 1. Types of data generated by various assessment strategies.

Another technique, not to be overlooked, is self-reflection. Char Booth (2011) outlines three simple questions in her book related to teaching and instructional design that can easily be applied to outreach activities and used in conjunction with other assessment methods. The questions are:

- What was positive about the interaction? What went well?

- What was negative about the interaction? What went wrong?

- Describe one thing you would like to improve or follow up on.

It is easy to get into ruts with programming and advocacy. If the event was “successful” the last time, it is tempting to just repeat the same actions. If it did not go well, there may be an opportunity to change the event and make it better. By immediately following an activity with the three-question reflection exercise, you have a starting point for expansion or improvement. Sometimes it takes persistence and/or adaptation for outreach to take hold with an audience.

Hypothetical Scenarios

Using the themes and assessment methods above, we have outlined a few hypothetical examples to help illustrate how the various techniques can be used and to help you expand your repertoire of evaluation strategies. Each example describes the outreach activity, along with the type of library and staff involved, and identifies discrete goals that influenced the outreach activity and assessment strategy. Each scenario outlines the time, budget, manpower investment, type of data to collect, and possible limitations. Not every assessment strategy that could be employed in each example was discussed; rather, we have highlighted a few.

We chose these examples to address the following questions:

- What is the event supposed to accomplish?

- How do we know if the event was successful?

- What data can be gathered to demonstrate if the goals were accomplished?

- What is the best way to gather the data?

As these examples are hypothetical, we have not aligned these goals to larger organizational goals, but in a real-world scenario, that is another question that should be addressed.

Scenario #1: Whole Person Outreach

A medium-sized academic library is collaborating with their local health and wellness center to bring in a team of five animal handlers. This event will take place over the course of four hours midday during finals week inside the library in a reserved space. Two library staff will be in attendance; one person is designated to support the animal handlers while the other interacts with attendees. Based on previous events, 150 students are expected to attend. The budget for the event includes parking passes for the animal handlers, treats for the animals, signage, and fees associated with the coordinating organization.

Goal 1: To reduce student anxiety and stress during finals through participation in the pet therapy activity. Participating students will demonstrate a reduction of three points on a 10-point scale between the pre- and post-event surveys.

Assessment strategy:

- Face-to-face audience survey: Recruit a sample of 20 participants to complete a pre/post survey to measure their stress/anxiety levels.

- Time: Moderate. It will take a fair amount of time to develop good survey questions. There are “free,” easy-to-use tools that can be employed via paper or online (e.g. Google Forms).

- Manpower: Moderate. Multiple people should be involved in developing questions and testing the instrument. Depending on staff knowledge, you may need to seek assistance with analysis of the data.

- Data: This survey will ask participants to rank their stress on a numerical scale; therefore, it will be quantitative data. If participants write in open responses, you will have to code qualitative data to pull out themes and make meaning of the feedback.

- Limitation: Students are busy, and may not have time to complete a pre/post survey. This will require tracking participants to ensure they complete the post survey.
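The pre/post comparison behind Goal 1 can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed method: the function name `mean_stress_reduction`, the data layout (one pair of self-reported scores per participant), the choice to average the individual drops, and the sample numbers are all hypothetical. Only the 10-point scale and the three-point target come from the scenario.

```python
from statistics import mean

TARGET_REDUCTION = 3  # three-point drop on a 10-point scale (from Goal 1)


def mean_stress_reduction(responses):
    """Return the average drop in self-reported stress (pre minus post).

    `responses` is a list of (pre, post) score pairs. Participants who
    never completed the post survey (post is None) are skipped.
    """
    drops = [pre - post for pre, post in responses if post is not None]
    if not drops:
        raise ValueError("no completed pre/post pairs to analyze")
    return mean(drops)


# Hypothetical scores for illustration only, not real survey results.
sample = [(8, 5), (7, 3), (9, 6), (6, None), (8, 4)]
reduction = mean_stress_reduction(sample)
goal_met = reduction >= TARGET_REDUCTION
print(f"Average reduction: {reduction:.1f} points; goal met: {goal_met}")
```

Skipping incomplete pairs mirrors the limitation noted above: students who do not return for the post survey simply drop out of the comparison, so it is worth reporting how many pairs were actually analyzed alongside the average.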

Goal 2: To illustrate engagement with the mental health/wellness activity at the library. At least 150 students will attend and 50% of these attendees will spend five minutes with the animal teams.

Assessment strategy:

- Head counts and observations during the event: One staff member will observe for 15 minutes at the top of each hour throughout the course of the event. Data will be collected on: How many people attended? How long are people staying during the event? How many people walked away or rushed past? How many people asked questions?

- Time: Moderate. Since we will only be observing for a portion of the event, this limits the time involved, but the data will also have to be compiled and analyzed.

- Manpower: Minimal. Only one staff member needs to be involved based on this sample size.

- Data: Quantitative since we will be measuring the number of people doing specific activities, and length of time. We would only have qualitative data to analyze if we decide to record any comments made during the event.

- Limitations: The data is limited to simple numbers and you do not know people’s reasons for dwelling at the station or walking away. You must be careful to choose appropriate behaviors to observe and to not attribute false assumptions to the behaviors (e.g. if a person rushes away, they must be scared of animals). Combining this data with a qualitative strategy would answer the “why” behind people’s behaviors.
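As a rough sketch of how the observation tallies for Goal 2 might be compared against the targets, consider the Python below. The function name `summarize_engagement`, the idea of logging one dwell time per observed student, and the sample values are hypothetical. The sketch also assumes that "spend five minutes" means at least five minutes, which the scenario does not state explicitly.

```python
ATTENDANCE_TARGET = 150   # students expected to attend (from Goal 2)
DWELL_MINUTES = 5         # time spent with the animal teams (from Goal 2)
DWELL_SHARE_TARGET = 0.5  # 50% of attendees (from Goal 2)


def summarize_engagement(head_count, observed_dwell_minutes):
    """Compare a head count and observed dwell times against Goal 2."""
    if not observed_dwell_minutes:
        raise ValueError("no dwell times were recorded")
    # Assumption: a student "spent five minutes" if they stayed 5+ minutes.
    stayed = sum(1 for m in observed_dwell_minutes if m >= DWELL_MINUTES)
    share = stayed / len(observed_dwell_minutes)
    return {
        "attendance_met": head_count >= ATTENDANCE_TARGET,
        "dwell_share": share,
        "dwell_met": share >= DWELL_SHARE_TARGET,
    }


# Hypothetical observation log: minutes each observed student stayed.
observed = [2, 7, 10, 1, 6, 5, 3, 12]
summary = summarize_engagement(head_count=162, observed_dwell_minutes=observed)
print(summary)
```

Because the staff member observes for only part of each hour, the dwell share here is an estimate drawn from a sample of attendees, not a measurement of everyone who came through.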

Scenario #2: Large-Scale, Multi-Pronged Themed Events and Programming

The library of a liberal arts college is hosting a first-year orientation event for the incoming class of 500 students. This event will be one day, over the course of six hours, with multiple activities available, such as meeting with subject librarians, hands-on time with special collection specimens, a photo booth, and a trivia contest. Students will also be able to learn about library resources and services through a scavenger hunt throughout the library. Since this event is so large, it involves participation from all library workers (approximately 30 people). The budget for this event is moderate: approximately $2,000 for library swag items (e.g. pencils, water bottles), marketing, and food to entice the students to attend.

Goal 1: To introduce students to library resources and services by having at least 30% of the first-year class participate in the library orientation.

Assessment strategy:

- Head counts: Counting all the students that come into the library to attend the event via gate counts before/after the event. This will measure what percentage of the incoming first-year students participated in the event, since it is optional.

- Time: Minimal. Barely any time involved since this will be automated.

- Manpower: Minimal. One person will check gate counts.

- Data: Quantitative since we will only be measuring how many people attended the event.

- Limitations: Strict head counts do not measure engagement, how long they stayed, or what information they retained. Also, if a participant stands in the way of the sensor, or a large group enters, gate count numbers may be inaccurate.
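The arithmetic behind this goal is simple: 30% of a class of 500 is 150 students. A minimal Python sketch of the gate-count comparison follows. The function name `participation_rate` and the counter readings are hypothetical, and the sketch assumes the gate registers each entry once and that everyone entering during the event is a first-year student. Neither assumption is guaranteed in practice.

```python
CLASS_SIZE = 500             # incoming first-year students (from the scenario)
PARTICIPATION_TARGET = 0.30  # 30% of the class (from Goal 1)


def participation_rate(gate_before, gate_after, class_size=CLASS_SIZE):
    """Estimate the share of the class that attended from gate readings."""
    entries = gate_after - gate_before
    if entries < 0:
        raise ValueError("gate reading went down; check the readings")
    return entries / class_size


# Hypothetical counter readings taken before and after the event.
rate = participation_rate(gate_before=12040, gate_after=12225)
print(f"Estimated participation: {rate:.0%}")
print("Goal met:", rate >= PARTICIPATION_TARGET)
```

If the gate counts both entries and exits, the difference would need to be halved before dividing, which is one more reason to treat the result as an estimate.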

Goal 2: To increase undergraduate student engagement with the library.

Assessment strategies:

- Observations during the event: There will be two people assigned to each activity station; one will record general observations throughout the event while the other engages with the students. Data will be collected on: How many people participated in the activity? What questions did they ask? What comments did they make? Did they ask follow-up questions about library services?

- Time: Intensive. There are numerous stations and the data will have to be compiled and analyzed.

- Manpower: Extensive. In this case, 15 people will collect data.

- Data: Qualitative and quantitative since we will be measuring the number of people doing specific activities and questions and comments made.

- Limitations: It is important to not make assumptions about people’s motivations and experiences. You must be careful of the conclusions that you draw from observations alone. However, evaluating the data behind what questions were asked or what comments were made will provide richer information.

- Compiling social media comments and documentation: Gathering information about the event (including photos) via Twitter, Facebook, Instagram, and student newspaper sources related to the event by searching on the library’s name and designated event hashtag.

- Time: Moderate. Staff will have to actively seek out this information, as hashtags are not consistently used.

- Manpower: Moderate. One person will gather social media comments.

- Data: Qualitative since text and images from posts and articles will be gathered and coded based on participants’ impressions.

- Limitations: There are questions of access; if comments are not public, you cannot find them. In addition, if participants post on alternate platforms (e.g. Snapchat, YikYak) that you do not check, they will not be discovered.

Goal 3: Students will become aware of at least two library resources and services that can help them with their upcoming research projects.

Assessment strategies:

- Vox pops: Using a video recorder, willing participants will be asked what library resources and services were new to them, and how they might use them over the coming year. This will take place in an area offset from the main library doors, so comments can be captured before people leave the event.

- Time: Intensive. It will take a fair amount of time to set up equipment, find and interview willing subjects, and process and edit video afterwards. There will also be a substantial amount of time involved in analyzing the content of the interviews.

- Manpower: Moderate. One to two people. This activity may require assistance with lighting, recording, interviewing and recruiting subjects, and processing video.

- Data: Qualitative since we will only be capturing people’s comments and reactions.

- Limitations: This measures short-term awareness of resources just mentioned. This does not measure willingness to use such resources during point-of-need.

- Focus groups: A week after the orientation event, gather a sample of ten first-year students and break them into two focus groups, lasting 45 minutes. Students will be asked: what library services do they remember, what services have they used or do they plan to use, and what services would help them in their research. (Depending on the time allotted for the focus group, you could prepare questions to address multiple goals; we will not do that in this example). Students will receive a free pizza lunch for participation.

- Time: Intensive. Recruiting volunteers, creating good questions to ask, observing and recording the focus groups, and coding responses will all take a lot of time.

- Manpower: Moderate. Two people minimum will be needed to run the focus group and analyze data.

- Data: Qualitative since we will be gathering people’s comments.

- Limitations: Funds to provide lunch to participants might not be feasible for all libraries. Further, although focus groups generate rich, qualitative data, the sessions may need to be audio recorded and transcribed (which may cost money).

Goal 4: To determine whether the layout of activities is efficient and whether high-quality customer service is provided by library staff.

Assessment strategy:

- Mystery shopper: Recruit a few (3-4) upperclass students who are not heavy library users (so they will be unknown to library staff) and have them go through the event as participants while recording their observations. They will use a checklist and observation form that asks questions about each activity, such as: Did the library staff greet you at the activity? Were there bottlenecks at the activity, and if so, where? Where could signage be used to improve traffic flow? Students serving as mystery shoppers will receive a bookstore gift card for their time.

- Time: Intensive. It will take a lot of time to recruit and train the volunteers, create a checklist or form to record observations, debrief after the event, and code responses.

- Manpower: Extensive. It will involve library staff for training, debriefing, coding and analyzing the data and student volunteers to carry out the activity.

- Data: Both qualitative and quantitative (yes/no) depending on the feedback form for the student volunteers.

- Limitations: Funds to provide gift cards to evaluators might not be feasible for all libraries. Recruitment of mystery shoppers who are unfamiliar with the library’s orientation activities may be difficult, especially since recruitment will take place before the semester begins.

- Focus groups: A week after the orientation event, gather a sample of ten first-year students and break them into two focus groups. During the debrief, students will be asked: which activities were most engaging and/or fun, what library services do they remember, and suggestions for improvement of the event. Students will receive a bookstore gift card for participation.

- Time: Intensive. Recruiting volunteers, creating good questions to ask, observing and recording the focus groups, and coding responses will all take a lot of time.

- Manpower: Moderate. Two people minimum will be needed to run the focus group and analyze data.

- Data: Focus groups generate rich, qualitative data.

- Limitations: Funds to provide gift certificates to participants might not be feasible for all libraries. The focus group sessions may need to be audio recorded and transcribed (which may cost money).

Scenario #3: Community-Organization Outreach

A medium-sized public library is putting on a free two-hour workshop for adults to learn how to make and bind a book. Library materials related to the topic will be displayed. The library will be partnering with a local book arts organization to put on the event. Funding for the workshop is part of a larger community engagement grant. The library hopes to draw fifteen citizens for this hands-on activity.

Goal 1: To have patrons use or circulate three items related to bookbinding and paper arts from the library’s collection during, or immediately following, the event.

Assessment strategy:

- Circulation/use counts: Determine how many items were removed from the selection displayed at the event.

- Time: Minimal. Selecting and creating an informal book display will require very little time.

- Manpower: Minimal. Only one person will be needed to count remaining items from the display or look at circulation statistics.

- Data: Quantitative since we will only be measuring materials used.

- Limitations: This does not gather what participants learned or how engaged they were with the event.

Goal 2: To develop a relationship with the book arts organization and co-create future programming.

Assessment strategies:

- Mini-interview: Interview the activity instructor from the arts organization following the event and ask them how they felt the event went, how the event could be improved, and whether they believe that the partnership aligns with their organization’s mission.

- Time: Minimal. Since we will only be measuring the opinion of one person, preparation (generating questions) and analyzing and coding the results will be fairly simple and straightforward.

- Manpower: Minimal. Only one staff person will interview the instructor.

- Data: Qualitative, as the questions will be open-ended.

- Limitations: This assesses the opinion of the instructor, which might not be the opinion of the administration of the organization, which directs funds and time allotment.

- White board comments: Ask participants: Which of the following activities would you be interested in (pop-up card class, artist’s book talk, papermaking class)? Have participants indicate interest with a checkmark. This will gather data on what kinds of programs could be created as a follow-up to the book-binding workshop.

- Time: Minimal. A count-based white board survey requires almost no setup and little preparation or post-collection time.

- Manpower: Minimal. One person will be able to post and record survey responses.

- Data: Quantitative since we will be using checkmarks to indicate preferences.

- Limitations: Questions must be short and require simple responses. If you use white boards at a large event, someone will need to save the data (such as by taking a photo) before clearing the board to gather new responses.

Goal 3: To provide an engaging event where 75% of attendees rate the activity satisfactory or higher.

Assessment strategy:

- Tear-off Postcard: Give out a self-addressed stamped tear-off postcard to workshop participants at the conclusion of the event. One side of the postcard will feature joint marketing for the library and the book arts organization, while the tear-off side will carry a brief survey. Survey questions include: “Rate your satisfaction with the workshop (from extremely dissatisfied to extremely satisfied, on a 5-point scale)”, “Rate the length of the workshop (from too long to too short, on a 3-point scale)”, and “What suggestions do you have for improvement?”

- Time: Moderate. Since this is a partnership, final approval will be needed from both organizations, meaning advance planning is required.

- Manpower: Moderate. Someone will need to design the postcard, develop survey questions for the mailing, and compile and distribute data to both organizations.

- Data: Quantitative and qualitative, as two questions will have numerical responses and one will be open-ended.

- Limitations: Postcards may not be suitable depending on the survey audience. It costs money to print and provide postage for the postcards. Finally, unless people complete these on the spot, they may not be returned.
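Once postcards come back, checking Goal 3 is a short calculation. The following is a minimal sketch, not a prescribed procedure: the ratings are hypothetical, and it assumes that a 4 or 5 on the 5-point scale counts as "satisfactory or higher" (a library could reasonably set that cutoff elsewhere). Note that it can only measure returned postcards, which may not represent all attendees.

```python
# Sketch: check whether returned postcard ratings meet the 75% target.
# Assumption: ratings are coded 1 (extremely dissatisfied) to 5 (extremely
# satisfied), and 4 or higher is treated as "satisfactory or higher".
SATISFIED_MIN = 4
TARGET_SHARE = 0.75  # from Goal 3


def goal_met(ratings, threshold=SATISFIED_MIN, target=TARGET_SHARE):
    """Return (target met?, share of ratings at or above the threshold)."""
    if not ratings:
        # No postcards returned: the goal cannot be shown to be met.
        return False, 0.0
    satisfied = sum(1 for rating in ratings if rating >= threshold)
    share = satisfied / len(ratings)
    return share >= target, share


# Hypothetical ratings from ten returned postcards.
returned_ratings = [5, 4, 4, 3, 5, 2, 4, 5, 4, 4]
met, share = goal_met(returned_ratings)
print(f"{share:.0%} rated the workshop satisfactory or higher; goal met: {met}")
```

Reporting the number of postcards returned alongside the percentage makes it clear how much weight the result can bear.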

A Survey and A Call to Action

We hope that the introduction to various assessment techniques drives people toward new ways of thinking about their outreach work. We know, based on the literature, that assessment is often an afterthought. However, we suspected this may have to do with people’s comfort level with the various assessment strategies. To test our suspicion, we conducted an informal online survey of librarians about their experience with outreach activities and various assessment methods. The survey was first distributed in conjunction with a poster presentation that we gave at the 2015 Minnesota Library Association annual conference. The survey asked respondents: what kinds of outreach they have done, what kinds of outreach they would like to attempt, how they have assessed their outreach, how comfortable they would be administering suggested assessment methods, and what kind of library they work at.

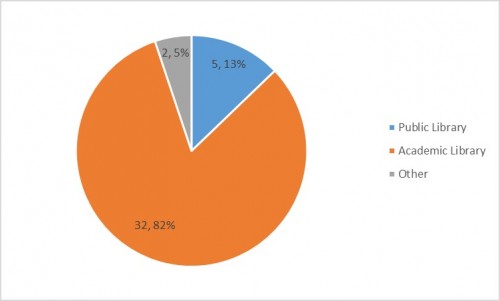

We had 39 responses to our survey, primarily from those who worked in academic libraries (see Figure 1).

Figure 1. Type of institutions where survey respondents work, measured by number who responded and percentage of the total.

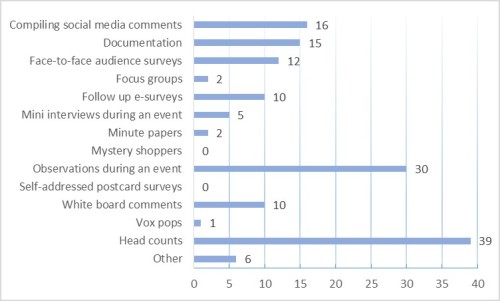

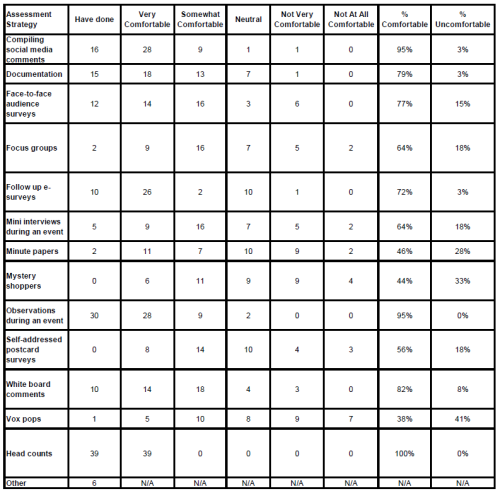

Survey participants reported a range of experience with the various assessment methods that we listed (see Figure 2). The only assessment method listed that was used by everyone was head counts. However, the majority of people have also tried taking observations during the event (76.9%). No other assessment method was used by the majority of survey respondents. The next most used methods were, in descending order: compiling social media comments (41%), documentation (38.5%), face-to-face audience surveys (30.8%), follow-up e-surveys (25.6%), and white board comments (25.6%). Focus groups, minute papers, and vox pops were used very minimally. Only two people had used focus groups and minute papers, and only one person had used vox pops. No one indicated that they had used either mystery shoppers or self-addressed postcard surveys. Unfortunately, we do not know how frequently these various methods have been employed, as that question was not asked. A positive response could have been triggered by an event that happened several years ago.
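With only 39 responses, each percentage above corresponds to a small number of people, and it can help to see both forms side by side. The sketch below converts the counts stated in the text to percentages and back-calculates approximate counts from the stated percentages. The back-calculated counts are estimates derived by rounding, not figures reported by the survey, and the function names are illustrative.

```python
# Sketch: move between counts and percentages for a 39-response survey.
TOTAL_RESPONSES = 39  # reported in the text

# Counts stated directly in the text.
reported_counts = {
    "head counts": 39,
    "focus groups": 2,
    "minute papers": 2,
    "vox pops": 1,
    "mystery shoppers": 0,
    "self-addressed postcard surveys": 0,
}

# Percentages stated in the text; counts for these are estimated below.
reported_percentages = {
    "observations": 76.9,
    "social media comments": 41.0,
    "documentation": 38.5,
    "face-to-face audience surveys": 30.8,
    "follow-up e-surveys": 25.6,
    "white board comments": 25.6,
}


def to_percentage(count, total=TOTAL_RESPONSES):
    """Share of respondents as a percentage, rounded to one decimal."""
    return round(100 * count / total, 1)


def to_count(percentage, total=TOTAL_RESPONSES):
    """Nearest whole number of respondents implied by a percentage."""
    return round(percentage / 100 * total)


percentages = {m: to_percentage(c) for m, c in reported_counts.items()}
estimated_counts = {m: to_count(p) for m, p in reported_percentages.items()}

for method, count in estimated_counts.items():
    print(f"{method}: about {count} of {TOTAL_RESPONSES}")
```

Seen this way, a method used by 25.6% of respondents was used by roughly ten people, which is a useful reminder of how far results from a small sample can be generalized.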

Figure 2. Number of survey participants who have utilized different methods for assessing outreach activities (out of 39 total responses).

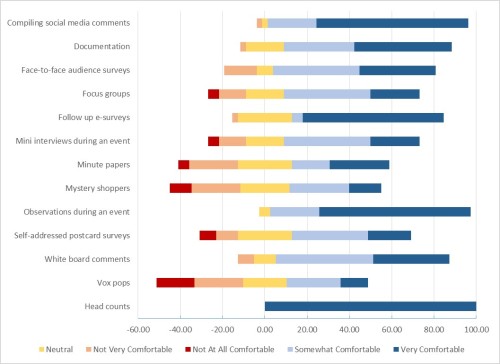

Survey participants ranged in their level of comfort with the various assessment methods. Figure 3 compiles our data on participants’ level of comfort, ranging from “Not At All Comfortable” to “Very Comfortable”. Everyone stated that they were very comfortable using head counts. The vast majority of respondents were comfortable with compiling social media comments and observations (95% each).

Figure 3. Survey participants’ level of comfort with using various methods for assessing outreach activities. Values are measured as percentages, with positive percentages indicating comfort and negative percentages indicating discomfort.

Table 2 compares participants’ use of each of these assessment methods to their comfort levels. For the purpose of this table, the “Somewhat Comfortable” and “Very Comfortable” responses were combined to define “% Comfortable” and the “Not Very Comfortable” and “Not At All Comfortable” responses were combined to define “% Uncomfortable.” Interestingly, not everyone who indicated a high level of comfort has employed these methods. For example, only 16 of 37 people who are comfortable compiling social media comments have ever done so and only 30 of 37 people who are comfortable conducting observations have ever done so. The data show that people are at least somewhat comfortable with a majority of the methods listed. A majority of people (over 50%) claim to be very or somewhat comfortable with 10 of the 13 methods. Only 3 methods (minute papers, mystery shoppers, and vox pops) had relatively high levels of discomfort among participants (28%, 33%, and 41%, respectively). In these cases, however, very few people stated that they had used these assessment methods. We fully realize that the results could have been different if we had more representation from public and school library staff.

Table 2. Survey participants’ use of, and level of comfort with using, various methods for assessing outreach activities. Percentages for “% Comfortable” were calculated by combining “Very Comfortable” and “Somewhat Comfortable” values. Percentages for “% Uncomfortable” were calculated by combining “Not Very Comfortable” and “Not At All Comfortable”.

Table 2
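The combining step described for Table 2 can be sketched in a few lines. This is an illustration under stated assumptions rather than the procedure we actually used: the function name is hypothetical, and the breakdown of responses is invented. Only the totals it reproduces (37 of 39 respondents comfortable, about 95%) come from the text.

```python
# Sketch: collapse four comfort levels into "% Comfortable" and
# "% Uncomfortable", as described for Table 2.
from collections import Counter

COMFORTABLE = {"Very Comfortable", "Somewhat Comfortable"}
UNCOMFORTABLE = {"Not Very Comfortable", "Not At All Comfortable"}


def summarize_comfort(responses):
    """Return the response count and rounded percentages for one method."""
    tally = Counter(responses)
    total = sum(tally.values())
    if total == 0:
        return {"n": 0, "pct_comfortable": 0, "pct_uncomfortable": 0}
    comfortable = sum(tally[level] for level in COMFORTABLE)
    uncomfortable = sum(tally[level] for level in UNCOMFORTABLE)
    return {
        "n": total,
        "pct_comfortable": round(100 * comfortable / total),
        "pct_uncomfortable": round(100 * uncomfortable / total),
    }


# Hypothetical breakdown for one method: only the 37-of-39 comfortable
# total matches the text; the split between levels is made up.
responses = (
    ["Very Comfortable"] * 20
    + ["Somewhat Comfortable"] * 17
    + ["Not Very Comfortable"] * 2
)
summary = summarize_comfort(responses)
print(summary)
```

Collapsing categories makes the table easier to read, at the cost of hiding the difference between "somewhat" and "very" comfortable respondents.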

In general, this illustrated to us that even if people are aware of these methods, for some reason they are not putting them to use when assessing outreach events. We had a suspicion, based on our experiences, conversations, and the library literature, that this was the case. This brief survey supported that suspicion. However, the survey did not delve into any of the reasons why people are not using a variety of assessment methods and we can only guess what their reasons may include: lack of time, budget constraints, lack of education, and lack of administrative support. We have illustrated that many of the methods do not require much time or money as long as the assessment planning goes hand-in-hand with setting event goals and activity planning. Lack of staff education is a real concern and those needing information about quantitative and qualitative methods may need to seek out classes, workshops, and professional development outside of their institution. That being said, there has been an increase in freely available or low-cost web courses (e.g. Coursera’s “Methods and Statistics in Social Sciences Specialization”) and there are numerous books and articles published on the topic (e.g. National Library of Medicine’s “Planning and Evaluating Health Information Outreach Projects”). Research methods have not traditionally been a required course in library schools, and therefore, it is unreasonable to expect that library workers have extensive experience in the area (O’Connor and Park, 2002; Powell et al., 2002; Partridge et al., 2014). However, due to external and internal pressure to show value in our libraries and activities, it is of utmost importance that we make assessment a priority.

Conclusion

Libraries have determined the need to participate in outreach activities for numerous reasons including: to connect with current and potential users, to stay relevant, to build goodwill, and to gain support. Demonstrating the value of libraries has generated lively discussion in the last few years, much of which is focused on collection metrics and head counts, such as number of visitors to the library, number of items checked out, number of reference questions asked, or number of workshop attendees. Although the library literature is filled with examples of various kinds of outreach activities and how libraries are connecting with communities, there is a distinct lack of discussion about how outreach is assessed. Assessment strategies are needed to demonstrate a return on investment for our constituents, and to improve our marketing, public relations, advocacy, and ultimately library patronage.

As we have illustrated, there are a variety of qualitative and quantitative methods available that can be used to assess virtually any kind of outreach. The technique(s) that you choose will depend on various factors, including the goals associated with the programming, target audience for the activity, and the type of outreach. There are pros and cons to each assessment method, with some involving a larger budget, more staffing and time, or familiarity with research methods. However, it is not always necessary to use the most complicated, expensive, and time-intensive method to collect valuable data. We cannot leave assessment to library administrators and those with assessment in their job titles. There are many opportunities to test assessment techniques and gather data in our daily work. We want to encourage those in libraries who are doing outreach work to incorporate goal-driven assessment when sharing the results of their work. Not only does it help illustrate impact, it helps others think critically about their own work and determine which kinds of outreach are most appropriate for their institutions and communities. By focusing only on head counts, we limit our ability to understand, both qualitatively and quantitatively, whether our outreach is meeting its objectives and goals.

Acknowledgements

We would like to thank Erin Dorney, our internal editor, for shepherding us throughout the writing process, and providing good feedback. In addition, our gratitude goes to Adrienne Lai, our external reviewer, who posed excellent questions and comments to consider that strengthened this article. We would also like to thank our colleagues at the University of Minnesota for providing feedback on our proposed outreach categories.

References

Audiences London. (2012). Researching audiences at outdoor events and festivals. London: Audiences London. Retrieved from: https://capacitycanada.ca/wp-content/uploads/2014/09/Researching-Audiences-at-Outdoor-Events.pdf

Annala, G. (2015). Abandoning “Outreach.” Retrieved March 9, 2016, from http://www.academiclibrarymarketing.com/blog/abandoning-outreach

Malenfant, K. J., & Hinchliffe, L. J. (2013). Assessment in action: Academic libraries and student success. Retrieved January 14, 2016, from http://www.ala.org/acrl/AiA#summaries

Bedwell, L. L., & Banks, C. c. (2013). Seeing through the eyes of students: Participant observation in an academic library. Partnership: The Canadian Journal Of Library & Information Practice & Research, 8(1), 1-17.

Benjes-Small, C., & Kocevar-Weidinger, E. (2011). Secrets to successful mystery shopping: A case study. College & Research Libraries News, 72(5), 274–287. Retrieved from http://crln.acrl.org/content/72/5/274.full

Bishoff, C., Farrell, S., & Neeser, A. (2015). Outreach, collaboration, collegiality: evolving approaches to library video game services. Journal of Library Innovation. Retrieved from http://www.libraryinnovation.org/article/view/412

Bonnand, S., & Hanson, M. A. (2015). Innovative solutions for building community in academic libraries. Hershey: Information Science Reference.

Booth, C. (2011). Reflective teaching, effective learning: Instructional literacy for library educators. American Library Association.

Boss, K., Angell, K., & Tewell, E. (2015). The amazing library race: Tracking student engagement and learning comprehension in library orientations. Journal of Information Literacy, 9(1), 4–14.

Brantley, P. (2015). Summer reading in the digital age. Publishers Weekly, 262(15), 24-25.

Bureau of Business and Economic Research, Labovitz School of Business and Economics, U. of M. D. (2011). Minnesota public libraries’ return on investment. Duluth. Retrieved from http://melsa.org/melsa/assets/File/Library_final.pdf

Carlson, J., Nelson, M. S., Johnston, L. R., & Koshoffer, A. (2015). Developing data literacy programs: Working with faculty, graduate students and undergraduates. Bulletin of the American Society for Information Science and Technology, 41(6), 14–17. http://doi.org/10.1002/bult.2015.1720410608

Carnegie Mellon University Center for Economic Development. (2006). Carnegie Library of Pittsburgh. Pittsburgh. Retrieved from http://www.clpgh.org/about/economicimpact/CLPCommunityImpactFinalReport.pdf

Chambers, A. (2015). Fairfield Bay library celebrates National Library Week. Arkansas Libraries, 72(2), 19.

Choinski, E., & Emanuel, M. (2006). The one‐minute paper and the one‐hour class. Reference Services Review, 34(1), 148–155. http://doi.org/10.1108/00907320610648824

Cilauro, R. (2015b). Community building through a public library Minecraft Gaming Day. The Australian Library Journal, 64(2), 87–93. http://doi.org/10.1080/00049670.2015.1015209

Davis, A., Rice, C., Spagnolo, D., Struck, J., & Bull, S. (2015). Exploring pop-up libraries in practice. The Australian Library Journal, 64(2), 94–104. http://doi.org/10.1080/00049670.2015.1011383

Dynia, J. D., Piasta, S. P., & Justice, L. J. (2015). Impact of library-based summer reading Clubs on primary-grade children’s literacy activities and achievement. Library Quarterly, 85(4), 386-405.

Engeszer, R. J., Olmstadt, W., Daley, J., Norfolk, M., Krekeler, K., Rogers, M., … & McDonald, B. (2016). Evolution of an academic–public library partnership. Journal of the Medical Library Association: JMLA, 104(1), 62.

Epps, L., & Watson, K. (2014). EMERGENCY! How queens library came to patrons’ rescue after Hurricane Sandy. Computers In Libraries, 34(10), 3-30.

Evans, C. (2013). One book, one school, one great impact!. Library Media Connection, 32(1), 18-19.

Ford, E. (2009). Outreach is (un)Dead. In the Library with the Lead Pipe. Retrieved from https://www.inthelibrarywiththeleadpipe.org/2009/outreach-is-undead/

Harmon, C., & Messina, M. (2013). Using social media in libraries: Best practices. Rowman & Littlefield.

Hayes-Bohanan, P. (2011). Building community partnerships in a common reading program. Journal Of Library Innovation, 2(2), 56-61.

Henry, C. L., Vardeman, K. K., & Syma, C. K. (2012). Reaching out: Connecting students to their personal librarian. Reference Services Review, 40(3), 396–407. http://doi.org/10.1108/00907321211254661

Hinchliffe, L. J., & Wong, M. A. (2010). From services-centered to student-centered: A “wellness wheel” approach to developing the library as an integrative learning commons. College & Undergraduate Libraries, 17(2-3), 213-224.

Hubbard, M. A. (2009). Banned Books Week and the Freedom of the Press: Using a research collection for campus outreach. Retrieved March 10, 2016 from: http://opensiuc.lib.siu.edu/cgi/viewcontent.cgi?article=1038&context=morris_articles

Hutzel, M. M., & Black, J. A. (2014). We had a “Maker Festival” and so can you!. Virginia Libraries, 60(2), 9-14.

Inklebarger, T. (2014). Dog therapy 101. (cover story). American Libraries, 45(11/12), 30-33. Retrieved from: http://americanlibrariesmagazine.org/2014/12/22/dog-therapy-101/

Jalongo, M. R., & McDevitt, T. (2015). Therapy dogs in academic libraries: A way to foster student engagement and mitigate self-reported stress during finals. Public Services Quarterly, 11(4), 254–269. http://doi.org/10.1080/15228959.2015.1084904

Johnson, M., Buhler, A. G., & Hillman, C. (2010). The library is undead: Information seeking during the zombie apocalypse. Journal of Library Innovation, 1(2), 29-43. Retrieved from: http://www.libraryinnovation.org/article/viewArticle/64

Lotts, M. (2015). Implementing a culture of creativity: Pop-up making spaces and participating events in academic libraries. College & Research Libraries News, 76(2), 72–75. Retrieved from http://crln.acrl.org/content/76/2/72.full?sid=8ac6cf59-e81b-4d25-8cc8-294973657490

Marcotte, A. (2015). Coloring book clubs cross the line into libraries. American Libraries, 46(11/12), 18-19.

Meyers-Martin, C., & Borchard, L. (2015). The finals stretch: exams week library outreach surveyed. Reference Services Review, 43(4), 510–532. http://doi.org/10.1108/RSR-03-2015-0019

Mezick, E. (2007). Return on investment: Libraries and student retention. The Journal of Academic Librarianship. Retrieved from http://www.sciencedirect.com/science/article/pii/S0099133307001243

Murphy, J., & Meyer, H. (2013). Best (and Not So Best) Practices in Social Media. ILA Reporter, 31(6), 12-15.

Noe, N. W., & Boosinger, M. L. (2010). Snacks in the Stacks: One Event—Multiple Opportunities. Library Leadership & Management, 24(2), 87–90.

Nunn, B., & Ruane, E. (2012). Marketing Gets Personal: Promoting Reference Staff to Reach Users. Journal of Library Administration, 52(6-7), 571–580. http://doi.org/10.1080/01930826.2012.707955

Oakleaf, M. (2010). The Value of Academic Libraries: A Comprehensive Research Review and Report. Association of College and Research Libraries. Chicago, IL: American Library Association. http://www.ala.org/acrl/sites/ala.org.acrl/files/content/issues/value/val_report.pdf

O’Connor, D., & Park, S. (2002). On my mind: Research methods as essential knowledge. American Libraries, 33(1), 50–50.

Partridge, H., Haidn, I., Weech, T., Connaway, L. S., & Seadle, M. (2014). The researcher librarian partnership: Building a culture of research. Library and Information Research, 38(18). Retrieved from http://www.lirgjournal.org.uk/lir/ojs/index.php/lir/article/view/619

Peet, L. (2015). Ferguson Library: A Community’s Refuge. Library Journal, 140(1), 12.

Potter, N. (2012). The library marketing toolkit. Facet Publishing.

Powell, R., Baker, L. M., & Mika, J. (2002). Library and information science practitioners and research. Library & Information Science Research, 24(1), 49-72. doi:10.1016/S0740-8188(01)00104-9

Schneider, T. (2003). Outreach: Why, how and who? Academic libraries and their involvement in the community. The Reference Librarian, 39(82), 199–213. http://doi.org/10.1300/J120v39n82_13

Schwartz, M. (2014). DIY one book at Sacramento PL. Library Journal, 139(4), 30.

Soria, K., Fransen, J., & Nackerud, S. (2013). Library use and undergraduate student outcomes: New evidence for students’ retention and academic success. Portal: Libraries and the Academy. Retrieved from http://conservancy.umn.edu/handle/11299//143312

Steman, T., & Post, P. (2013). History day collaboration: Maximizing resources to serve students. College & Undergraduate Libraries, 20(2), 204–214. http://doi.org/10.1080/10691316.2013.789686

Walden, G. R. (2014). Informing library research with focus groups. Library Management, 35(8/9), 558–564. http://doi.org/10.1108/LM-02-2014-0023

Wilson, V. (2012). Research methods: Interviews. Evidence Based Library & Information Practice, 7(2), 96-98.

Wray, C. C. (2014). Reaching underserved patrons by utilizing community partnerships. Indiana Libraries. 33(2), 73-75. Retrieved from: https://journals.iupui.edu/index.php/IndianaLibraries/article/view/16630/pdf_949

Zitron, L. (2013). Where is this all going: Reflective outreach.

Zettervall, S. (2014). Whole person librarianship. Retrieved March 10, 2016, from https://mlismsw.wordpress.com/2014/03/01/what-is-whole-person-librarianship/

