Electronic Resources Reviews

Quick Review of Microsoft Academic

Meredith Ayers

Science Librarian

Founders Memorial Library

Northern Illinois University

DeKalb, IL

mayers@niu.edu

Microsoft Academic (MA) (https://academic.microsoft.com/home) claims to be an academic search engine that uses knowledge discovery, machine learning, and semantic inference to help users search for scholarly information in new and better ways. However, this is not the first iteration of Microsoft Academic. Since its debut in 2004 as Microsoft Academic Search (MAS) (Ortega & Aguillo 2014) and its relaunch in 2014 (Harzing 2016), it has been heavily analyzed, tested, and critiqued in the literature. While this review will address some aspects of Microsoft Academic’s history, the focus will be on the product’s current features.

What is available in this database now? The homepage answers this question (Figure 1). Below the search box are two columns. The left column contains a box explaining that the database's data are available for offline processing and can be obtained by researchers with a Microsoft Academic Graph (MAG) subscription. The APIs are hosted by Microsoft Academic Knowledge Exploration Services (MAKES) and are available on Azure to MAKES subscribers. For publications to appear in Microsoft Academic, they must be discoverable and indexed by Microsoft's search engine, Bing. Below that box is a list of the top 15 authors, institutions, journals, and conferences for a subject discipline; the subject displayed changes with each visit to the page (Figure 1). The right column contains links to sample searches and upcoming conferences. Below the columns is a blog banner where the latest blog post can be read and older posts can be accessed.

Figure 1. Screenshot taken on November 11, 2020 (Microsoft Academic 2020).

More information about Microsoft Academic is available in the FAQ, which is linked at the bottom of the homepage. The frequently asked questions are grouped into the following categories: Help, Rankings & Analytics, Content, and API (Microsoft Academic FAQ 2020). Microsoft Academic recommends that researchers pay attention to the suggestions the database offers, since these help narrow down results quickly. According to the FAQ, MA is a semantic search engine that returns results related to the meaning of the initial query. It uses natural language processing to understand the content of each document, so it does not simply perform string matching on keywords (Microsoft Academic FAQ 2020). Figure 2 shows the main entities recognized by Microsoft Academic.

Figure 2. Main entities in Microsoft Academic (MA FAQ 2020).

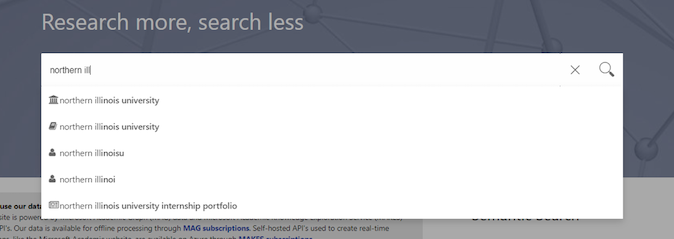

A search for the author's institution produced the suggestions shown in Figure 3, and the institution suggestion was selected.

Figure 3. Search suggestions from MA (MA 2020).

The search retrieved 20,771 results, with options to filter by time, top topics, publication types, top authors, top journals, top institutions (NIU in the top spot), top conferences, top MeSH descriptors, and top MeSH qualifiers, all listed in the left-hand column of the screen. The results are listed in the middle column, and the right-hand column shows the institution with a brief description and its top topics (Figure 4). An example of the results column is shown in Figure 5.

Figure 4. Screenshot of the third column on the result page (MA 2020).

Figure 5. View of results from the middle column (MA 2020).

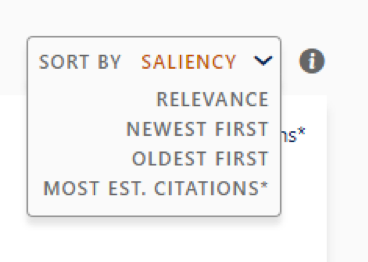

Searchers can use the stars in the middle of Figure 5 to rate the accuracy of the retrieved results. They must be logged in, however, to use the view function, which sits between the result-accuracy rating and the sort-by options (Figure 6).

Figure 6. Saliency sorting option (MA 2020).

A sample record is shown in Figure 7. It displays the basic information: title, citation, authors, abstract, and the numbers of references and citations. It also provides links to PDFs of the article and to websites where the article is indexed. Below the links to related topics are links to Follow, Claim, Cite, Add to reading list, or Share the record. Users must sign in to use the Follow, Claim, and Add to reading list functions, and they can sign in using Facebook, Google, LinkedIn, Microsoft, or Twitter. The Cite function offers citations in MLA, APA, or Chicago style; the citation can be copied to the clipboard or downloaded in Microsoft Word, BibTeX, or RIS format. The Share function lets the user copy a short link or share the record via Facebook, Twitter, or LinkedIn. Below these links is a set of three tabs listing the article's references, the articles that cite it, and related articles. The results in these tabs can be filtered and sorted just like search results.

Figure 7: Record in Microsoft Academic (MA 2020).

Many earlier studies have noted incorrect publication years assigned to some publications (Harzing 2016; Hug et al. 2017; Hug & Brändle 2017; Moussa 2019). Microsoft Academic addresses this issue in its FAQ, stating that the discrepancy arises because it records the first date it finds attached to a publication. New publications are added to an author's profile page only after the machine learning system finds high-confidence evidence that they belong there. Indicators of high confidence include the article being listed on the author's online CV and the article having much in common with the author's prior publications. These and other issues raised in previous publications are now addressed in the Microsoft Academic FAQ.

Numerous articles have compared the search capabilities and content of earlier iterations of Microsoft Academic with those of Scopus, Web of Science, and Google Scholar. The author leaves to others the in-depth analysis needed to determine whether Microsoft Academic still outperforms Scopus and Web of Science (Harzing 2016; Harzing & Alakangas 2017a; Harzing & Alakangas 2017b; Hug & Brändle 2017) and still underperforms or is comparable to Google Scholar (Harzing 2016; Harzing & Alakangas 2017a; Harzing & Alakangas 2017b; Hug et al. 2017; Moussa 2019; Orduña-Malea et al. 2014; Ortega & Aguillo 2014). While it remains to be seen whether the machine learning semantic search engine will live up to expectations, this brief overview will hopefully be useful to anyone exploring the database for the first time or returning to it after a hiatus.

References

Harzing, A. 2016. Microsoft Academic (Search): A phoenix arisen from the ashes? Scientometrics. 108:1637-1647. DOI: 10.1007/s11192-016-2026-y.

Harzing, A. & Alakangas, S. 2017a. Microsoft Academic: Is the phoenix getting wings? Scientometrics. 110:371-383. DOI: 10.1007/s11192-016-2185-x.

Harzing, A. & Alakangas, S. 2017b. Microsoft Academic is one year old: The phoenix is ready to leave the nest. Scientometrics. 112:1887-1894. DOI: 10.1007/s11192-017-2454-3.

Hug, S.E. & Brändle, M.P. 2017. The coverage of Microsoft Academic: Analyzing the publication output of a university. Scientometrics. 113:1551-1571. DOI: 10.1007/s11192-017-2535-3.

Hug, S.E., Ochsner, M. & Brändle, M.P. 2017. Citation analysis with Microsoft Academic. Scientometrics. 111:371-378. DOI: 10.1007/s11192-017-2247-8.

Microsoft Academic [Internet]. 2020. [accessed 2020 Nov 11]. Available from https://academic.microsoft.com/home.

Microsoft Academic FAQ [Internet]. 2020. [accessed 2020 Nov 11]. Available from https://academic.microsoft.com/faq.

Moussa, S. 2019. Is Microsoft Academic a viable citation source for ranking marketing journals? Aslib Journal of Information Management. 71(5):569-582. DOI: 10.1108/AJIM-03-2019-0070.

Orduña-Malea, E., Martín-Martín, A., Ayllon, J.M. & López-Cózar, E.D. 2014. The silent fading of an academic search engine: The case of Microsoft Academic Search. Online Information Review. 38(7):936-953. DOI: 10.1108/OIR-07-2014-0169.

Ortega, J.L. & Aguillo, I.F. 2014. Microsoft Academic Search and Google Scholar citations: Comparative analysis of author profiles. Journal of the Association for Information Science and Technology. 65(6):1149-1156. DOI: 10.1002/asi.23036.

This work is licensed under a Creative Commons Attribution 4.0 International License.

Issues in Science and Technology Librarianship No.96, Fall 2020. DOI: 10.29173/istl2582