Coincidence?

This post was written by Hal Hinderliter, as part of Practitioner Perspectives: Developing, Adapting, and Contextualizing the #DLFteach Toolkit, a blog series from DLF’s Digital Library Pedagogy group highlighting the experiences of digital librarians and archivists who utilize the #DLFteach Toolkit and are new to teaching and/or digital tools.

This post was written by Hal Hinderliter, as part of Practitioner Perspectives: Developing, Adapting, and Contextualizing the #DLFteach Toolkit, a blog series from DLF’s Digital Library Pedagogy group highlighting the experiences of digital librarians and archivists who utilize the #DLFteach Toolkit and are new to teaching and/or digital tools.

The Digital Library Pedagogy working group, also known as #DLFteach, is a grassroots community of practice, empowering digital library practitioners to see themselves as teachers and equip teaching librarians to engage learners in how digital library technologies shape our knowledge infrastructure. The group is open to anyone interested in learning about or collaborating on digital library pedagogy. Join our Google Group to get involved.

For this blog post, I’ve opted to provide some background information on the topic of my #DLFteach Toolkit entry: the EPUB (not an acronym) format, used for books and other documents. Librarians, instructors, instructional designers and anyone else who needs to select file formats for content distribution should be aware of what EPUB has to offer!

Electronic books: the fight over formats

The production and circulation of books, journals, and other long-form texts has been radically impacted by the growth of computer-mediated communication. Electronic books (“e-books”) first emerged near a half-century ago as text-only ASCII files, but are now widely available in a multitude of different file formats. Most notably, three competing options have been competing for market dominance: PDF files, KF8 files (for Amazon’s Kindle devices), and the open-source EPUB format. The popularity of handheld Kindle devices has created a devoted fan base for KF8 e-books, but in academia the ubiquitous PDF file remains the most common way to distribute self-contained digital documents. In contrast to these options, a growing movement is urging that libraries and schools eschew Kindles and abandon their reliance on PDFs in favor of the EPUB electronic book format.

The EPUB file format preserves documents as self-contained packages that manage navigation and presentation separately from the document’s reflowable content, allowing users to alter font sizes, typefaces, and color schemes to suit their individual preferences. E-books saved in the EPUB format are compatible with Apple’s iPads and iPhones as well as Sony’s Reader, Barnes & Nobles Nook, and an expansive selection of software applications for desktop, laptop, and tablet computers. Increasingly, that list includes screen reader software such as Voice Dream and VitalSource Bookshelf, meaning that a single file format – EPUB 3 – can be readily accessed by both sighted and visually impaired audiences.

The lineage of EPUB can be traced back to the Digital Audio-based Information System (DAISY), developed in 1994 under the direction of the Swedish Library of Talking Books and Braille. Today, EPUB is an open-source standard that is managed by the International Digital Publishing Forum, part of the W3C. In contrast to the proprietary origins of both PDF and KF8 e-books, modifications to the open EPUB standard have always been subject to public input and debate.

Accessibility in Academia: EPUB versus PDF

Proponents of universal design principles recommend the use of documents that are fully accessible to everyone, including users of assistive technologies, e.g., screen readers and refreshable braille displays. The DTBook format, a precursor to EPUB, was specifically referenced by Rose et al. (2006) in their initial delineation of Universal Design for Learning (UDL) as part of UDL’s requirement for multiple means of presentation. At the time, the assumption was that DTBooks would be distributed only to students who needed accessible texts, with either printed copies or PDF files for sighted learners. Today, however, it is no longer necessary to provide multiple formats, since EPUB 3 (the accessibility community’s preferred replacement for DTBooks) can be used with equal efficacy by all types of students.

In contrast, PDF files can range from completely inaccessible to largely accessible, depending on the amount of effort the publisher expended during the remediation process. PDF files generated from word processing programs (e.g., Microsoft Word) are not accessible by default, but instead require additional tweaks that necessitate the use of Adobe’s Acrobat Pro software (the version of Acrobat that retails for $179 per year). Users of assistive technologies have no recourse but to attempt opening a PDF file before often finding that the document lacks structure (needed for navigation), alt tags, metadata, or other crucial features. Even for sighted learners, PDFs downloaded from their university’s online repository will be difficult to view on smartphones, since PDF’s fixed page dimensions will require endless zooming and scrolling to display each column of text at an adequate font size.

The superior accessibility of EPUB has inspired major publishers to establish academic repositories of articles in EPUB format, e.g., ABC-CLIO, ACLS Humanities, EBSCO E-Books, Proquest’s Ebrary, Elsevier’s ScienceDirect, Taylor & Francis. Many digital-only journals offer their editions as EPUBs. For example, Trude Eikebrokk, editor of Professions & Professionalism, investigated the advantages of publishing in the EPUB format as described in this excerpt from the online journal Code{4}lib:

There are two important reasons why we wanted to replace PDF as our primary e-journal format. PDF is a print format. It will never be the best choice for reading on tablets (e.g. iPad) or smartphones, and it is challenging to read PDF files on e-book readers … We wanted to replace or supplement the PDF format with EPUB to better support digital reading. Our second reason for replacing PDF with EPUB was to alleviate accessibility challenges. PDF is a format that can cause many barriers, especially for users of screen readers (synthetic speech or Braille). For example, Excel tables are converted into images, which makes it impossible for screen readers to access the table content. PDF documents might also lack search and navigation support, due to either security restrictions, a lack of coded structure in text formats, or the use of PDF image formats. This can make it difficult for any reader to use the document effectively and impossible for screen reader users. On the other hand, correct use of XHTML markup and CSS style sheets in an EPUB file will result in search and navigation functionalities, support for text-to-speech/braille and speech recognition technologies. Accessibility is therefore an essential aspect of publishing e-journals: we must consider diverse user perspectives and make universal design a part of the publishing process.

The Future of EPUB

A robust community of accessibility activists, publishers, and e-book developers continues to advance the EPUB specification. The update to EPUB3 added synchronized audio narration, embedded video, MathML equations, HTML5 animations, and Javascript-based interactivity to the format’s existing support for metadata, hyperlinks, embedded fonts, text (saved as XHTML files) and illustrations in both Scalable Vector Graphic (SVG) and pixel-based formats. Next up: the recently announced upgrade to EPUB 3.2, which embraces documents created under the 3.0 standard while improving support for Accessible Rich Internet Applications (ARIA) and other forms of rich media. If you’re ready to join this revolution, have a run through the #DLFteach Toolkit’s EPUB MakerSpace lesson plan!

The post The #DLFteach Toolkit: Recommending EPUBs for Accessibility appeared first on DLF.

2021-04-27T13:00:54+00:00 Gayle HangingTogether: Nederlandse ronde tafel sessie over next generation metadata: Denk groter dan NACO en WorldCat http://feedproxy.google.com/~r/Hangingtogetherorg/~3/n-ABc9qABiA/Met dank aan Ellen Hartman, OCLC, voor het vertalen van de oorspronkelijke Engelstalige blogpost.

Op 8 maart 2021 werd een Nederlandse ronde tafel discussie georganiseerd als onderdeel van de OCLC Research Discussieserie over Next Generation metadata.

Bibliothecarissen, met achtergronden in metadata, bibliotheeksystemen, de nationale bibliografie en back-office processen, namen deel aan deze sessie. Hierbij werd een mooie variatie aan academische en erfgoed instellingen in Nederland en België vertegenwoordigd. De deelnemers waren geëngageerd, eerlijk en leverden met hun kennis en inzicht constructieve bijdragen aan een prettige uitwisseling van kennis.

Net als in de andere ronde tafel sessies werden de deelnemers gevraagd om in kaart te helpen brengen wat voor next generation metadata initiatieven er in Nederland en België worden ontplooid. De kaart die daarmee werd gevuld laat zien dat in deze regio een sterke vertegenwoordiging is van bibliografische en erfgoed projecten (zie de linker helft van de matrix). Verschillende next-generation metadata projecten van de Koninklijke Bibliotheek Nederland werden omschreven, zoals:

De Digitale Erfgoed Referentie Architectuur (DERA) is ontwikkeld als onderdeel van een nationale strategie voor digitaal erfgoed in Nederland. Het is een framework voor het beheren en publiceren van erfgoed informatie als linked open data (LOD), op basis van overeengekomen conventies en afspraken. Het van Gogh Worldwide platform is een voorbeeld van de applicatie van DERA, waar metadata gerelateerd aan de kunstwerken van van Gogh, die in bezit zijn van Nederlandse erfgoed instellingen en in privé bezit worden geaggregeerd.

Een noemenswaardig in kaart gebracht initiatief op het gebied van Research Informatie Management (RIM) en Scholarly Communications was de Nederlandse Open Knowledge Base. Een in het afgelopen jaar opgestart initiatief binnen de context van de deal tussen Elsevier en VSNU, NFU en NWO om gezamenlijk open science services te ontwikkelen op basis van RIM systemen, Elsevier databases, analytics oplossingen en de databases van de Nederlandse onderzoeksinstellingen. De Open Knowledge Base zal nieuwe applicaties kunnen voeden met informatie, zoals een dashboard voor het monitoren van de sustainable development goals van de universiteiten. Het uitgangspunt van de Knowledge Base is het significant kunnen verbeteren van de analyse van de impact van research.

Ondanks dat er tijdens de sessie innovatieve projecten in kaart werden gebracht, werd er net als in sommige andere sessies, onduidelijkheid gevoeld over hoe we nu verder door kunnen ontwikkelen. Ook was er sprake van enig ongeduld met de snelheid van de transitie naar next generation metadata. Sommige bibliotheken waren gefrustreerd over het gebrek aan tools binnen de huidige generatie systemen om deze transitie te versnellen. Zoals de integratie van Persistant Identifiers (PID), lokale authorities of links met externe bronnen. Meerdere tools moeten gebruiken voor een workflow voelt als een stap terug in plaats van vooruit.

Buiten praktische belemmeringen werd de discussie vooral gedomineerd door de vraag wat ons tegenhoudt in deze ontwikkeling. Met zoveel bibliografische data die al als LOD gepubliceerd wordt, wat is er dan verder nodig om deze data te linken? Zouden we niet op zoek moeten naar partners om samen een kennis-ecosysteem te ontwikkelen?

Een deelnemer gaf aan dat bibliotheken voorzichtig of terughoudend zijn met de databronnen waarmee ze willen linken. Authority files zijn betrouwbare bronnen, waarvoor er nog geen gelijkwaardige alternatieven bestaan in het zich nog ontwikkelende linked data ecosysteem. Het gebrek aan conventies voor de betrouwbaarheid is misschien een reden waarom bibliotheken misschien wat terughoudend zijn in het aangaan van linked data partnerschappen of terug deinzen voor het vertrouwen op externe data, zelfs van gevestigde bronnen als Wikidata. Want, het linken naar een databron is een indicatie van vertrouwen en een erkenning van de datakwaliteit.

Het gesprek ging vervolgens verder over linked datamodellen. Welke data creëer je zelf? Hoe geef je je data vorm en link je met andere data? Sommige deelnemers gaven aan dat er nog steeds een gebrek aan afspraken en duidelijkheid is over concepten zoals een “werk”. Anderen gaven aan dat het vormgeven van concepten precies is waar linked data om draait en dat meerdere onthologieën naast elkaar kunnen bestaan. In andere woorden, het is misschien niet nodig om de naamgeving in harde standaarden te vatten.

“Er is geen uniek semantisch model. Wanneer je verwijst naar gegevens die al door anderen zijn gedefinieerd, geef je de controle over dat stukje informatie op, en dat kan een mentale barrière zijn tegen het op de juiste manier werken met linked data. Het is veel veiliger om alle data in je eigen silo op te slaan en te beheren. Maar op het moment dat je dat los kunt laten, kan de wereld natuurlijk veel rijker worden dan je in je eentje ooit kunt bereiken.”

Het gesprek ging verder met een discussie over wat we kunnen doen om bibliotheekmedewerkers die catalogiseren te trainen. Een van de deelnemers vond dat het handig zou zijn om te beginnen met ze te leren te denken in linked dataconcepten en om te oefenen met het opbouwen van een knowledge graph en het experimenteren met het bouwen van verschillende structuren. Net als dat een kind dat doet door met LEGO te spelen. De deelnemers waren het erover eens dat we op dit moment nog te weinig kennis hebben van de mogelijkheden en de consequenties van het gebruik van linked data.

“We moeten leren onszelf te zien als uitgevers van metadata, zodat anderen het kunnen vinden – maar we hebben geen idee wie de anderen zijn, we moeten zelfs groter denken dan de NACO van de Library of Congress of WorldCat. We hebben het niet langer over de records die we maken, maar over stukjes records die uniek zijn, want veel komt al van elders. We moeten ons dit realiseren en onszelf afvragen: wat is onze rol in het grotere geheel? Dit is erg moeilijk om te doen!”

De deelnemers gaven aan dat het erg belangrijk was om deze discussie binnen hun bibliotheek op gang te brengen. Maar hoe doe je dat precies? Het is een groot onderwerp en het zou mooi zijn als daar vanuit het management ook aandacht voor is.

Een leidinggevende binnen de deelnemersgroep reageerde hierop en gaf aan:

“Het valt me op dat de hoeveelheid bibliotheken die hier nog echt mee te maken hebben kleiner wordt. (…) [In mijn bibliotheek] produceren we nauwelijks zelf nog metadata. (…) Als we kijken naar wat we zelf nog produceren is dat bijvoorbeeld nog het beschrijven van foto’s van een studentenvereniging, eigenlijk niets dus. Metadata is eigenlijk alleen nog een onderwerp voor een kleine groep specialisten.”

Hoe provocerend deze observatie ook was, dit weerspiegelt wel een realiteit die we moeten erkennen en tegelijkertijd in perspectief moeten plaatsen. Daar was helaas geen tijd voor, want de sessie liep ten einde. Het was zeker een gesprek waar we nog een tijd hadden kunnen doorpraten!

In maart 2021 hield OCLC Research een discussiereeks gericht op twee rapporten:

De rondetafelgesprekken werden gehouden in verschillende Europese talen en de deelnemers konden hun eigen ervaringen delen, een beter begrip krijgen van het onderwerp en kregen handvatten om vol vertrouwen plannen te maken voor de toekomst. .

De plenaire openingssessie opende de vloer voor discussie en verkenning en introduceerde het thema en de bijbehorende onderwerpen. Samenvattingen van alle rondetafelgesprekken worden gepubliceerd op de OCLC Research-blog Hanging Together.

Op de afsluitende plenaire vergadering op 13 april werden de verschillende rondetafelgesprekken samengevat.

The post Nederlandse ronde tafel sessie over next generation metadata: Denk groter dan NACO en WorldCat appeared first on Hanging Together.

On Saturday 6th March 2021, the eleventh Open Data Day took place with people around the world organising over 300 events to celebrate, promote and spread the use of open data.

Thanks to the generous support of this year’s mini-grant funders –Microsoft, UK Foreign, Commonwealth and Development Office, Mapbox, Global Facility for Disaster Reduction and Recovery, Latin American Open Data Initiative, Open Contracting Partnership and Datopian – the Open Knowledge Foundation offered more than 60 mini-grants to help organisations run online or in-person events for Open Data Day.

We captured some of the great conversations across Asia/the Pacific, Europe/Middle East/Africa and the Americas using Twitter Moments.

Below you can discover all the organisations supported by this year’s scheme as well as seeing photos/videos and reading their reports to help you find out how the events went, what lessons they learned and why they love Open Data Day:

Thanks to everyone who organised or took part in these celebrations and see you next year for Open Data Day 2022!

Need more information?

If you have any questions, you can reach out to the Open Knowledge Foundation’s Open Data Day team by emailing opendataday@okfn.org or on Twitter via @OKFN.

2021-04-23T11:04:55+00:00 Stephen Abbott Pugh Digital Library Federation: The #DLFteach Toolkit: Participatory Mapping In a Pandemic https://www.diglib.org/the-dlfteach-toolkit-participatory-mapping-in-a-pandemic/ This post was written by Jeanine Finn (Claremont Colleges Library), as part of Practitioner Perspectives: Developing, Adapting, and Contextualizing the #DLFteach Toolkit, a blog series from DLF’s Digital Library Pedagogy group highlighting the experiences of digital librarians and archivists who utilize the #DLFteach Toolkit and are new to teaching and/or digital tools.

This post was written by Jeanine Finn (Claremont Colleges Library), as part of Practitioner Perspectives: Developing, Adapting, and Contextualizing the #DLFteach Toolkit, a blog series from DLF’s Digital Library Pedagogy group highlighting the experiences of digital librarians and archivists who utilize the #DLFteach Toolkit and are new to teaching and/or digital tools.

The Digital Library Pedagogy working group, also known as #DLFteach, is a grassroots community of practice, empowering digital library practitioners to see themselves as teachers and equip teaching librarians to engage learners in how digital library technologies shape our knowledge infrastructure. The group is open to anyone interested in learning about or collaborating on digital library pedagogy. Join our Google Group to get involved.

See the original lesson plan in the #DLFteach Toolkit.

Our original activity was designed around using a live GoogleSheet in coordination with ArcGIS Online to collaboratively map historic locations for an in-class lesson to introduce students to geospatial analysis concepts. In our example, a history instructor had identified a list of cholera outbreaks with place names from 18th-century colonial reports.

In the original activity, students were co-located in a library classroom, reviewing the historic cholera data in groups. A Google Sheet was created and shared with everyone in the class for students to enter “tidied” data from the historic texts collaboratively. The students then worked with a live link from Google Sheets, allowing the outbreak locations to be served directly to the ArcGIS Online map. It was successful and a useful tool for encouraging engagement and for getting familiar with GIS.

Then COVID-19 in 2020 arrived. Instead of a centuries-distant disease outbreak, students learning digital mapping this past year were thrust into socially-distant instructional settings driven by a contemporary pandemic that radically altered their modes of learning. The collaborative affordances of tools like ArcGIS Online were pressed into service to help students collaborate effectively and meaningfully in real-time while learning from home.

As an example, one geology professor at Pomona College encouraged her students to explore the geology of their local environment. Building on shared readings and lectures on geologic history and rock formations, students were encouraged to research the history of the land around them, and include photographs, observations, and other details to enrich the ArcGIS StoryMap. The final map included photographs and geology facts from students’ home locations around the world.

Header for Geology class group StoryMap at Pomona College, Fall 2020

Header for Geology class group StoryMap at Pomona College, Fall 2020

A key feature of the ArcGIS StoryMap platform that appealed to the instructor was the ability for the students to work collaboratively on the platform itself — not across shared files on folders on Box, GSuite, the LMS, etc. While this functioned reasonably well, there were several roadblocks to effective collaboration that we encountered along the way. Most of the challenges related to permissions settings related to ArcGIS Online administration, as the “shared update” features are not set as default permissions. Other challenges included file size limitations for images the students wished to upload, the inability of more than one user to edit the same file simultaneously, and potential security issues (including firewalls) in nations with more restrictive internet laws.

Reflecting on these uses of StoryMaps over this past semester, we encourage instructors and library staff interested in to:

Resources:

Participatory Mapping with Google Forms, Google Sheets, and ArcGIS Online (Esri community education blog): https://community.esri.com/t5/education-blog/participatory-mapping-with-google-forms-google-sheets-and-arcgis/ba-p/883782

Optimize group settings to share stories like never before (Esri ArcGIS blog): https://www.esri.com/arcgis-blog/products/story-maps/constituent-engagement/optimize-group-settings-to-share-stories-like-never-before/

Teach with Story Maps: Announcing the Story Maps Curriculum Portal (University of Minnesota, U-Spatial: https://research.umn.edu/units/uspatial/news/teach-story-maps-announcing-story-maps-curriculum-portal

Getting Started with ArcGIS StoryMaps (Esri): https://storymaps.arcgis.com/stories/cea22a609a1d4cccb8d54c650b595bc4

VI Conclusion recommendations

Gather materials ahead of time. Photographs from digital archives, maps

There may be data cleaning issues.

The post The #DLFteach Toolkit: Participatory Mapping In a Pandemic appeared first on DLF.

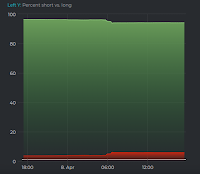

2021-04-22T19:11:26+00:00 Gayle David Rosenthal: Dogecoin Disrupts Bitcoin! https://blog.dshr.org/2021/04/dogecoin-disrupts-bitcoin.html Two topics I've posted about recently, Elon Musk's cult and the illusory "prices" of cryptocurrencies, just intersected in spectacular fashion. On April 14 the Bitcoin "price" peaked at $63.4K. Early on April 15, the Musk cult saw this tweet from their prophet. Immediately, the Dogecoin "price" took off like a Falcon 9.

Two topics I've posted about recently, Elon Musk's cult and the illusory "prices" of cryptocurrencies, just intersected in spectacular fashion. On April 14 the Bitcoin "price" peaked at $63.4K. Early on April 15, the Musk cult saw this tweet from their prophet. Immediately, the Dogecoin "price" took off like a Falcon 9.Dogecoin — the crypto token that was started as a joke and that is the favourite of Elon Musk — is having a bit of a moment. And when we say a bit of a moment, we mean that it is on a lunar trajectory (in crypto talk: it is going to da moon).The headlines tell the story — Timothy B. Lee's Dogecoin has risen 400 percent in the last week because why not and Joanna Ossinger's Dogecoin Rips in Meme-Fueled Frenzy on Pot-Smoking Holiday.

At the time of writing this, it is up over 200 per cent in the past 24 hours — more than tripling in value (for those of you who need help on percentages, it is Friday afternoon after all). Over the past week it’s up more than 550 per cent (almost seven times higher!).

The Dogecoin "price" graph Kelly posted was almost vertical. The same day, Peter Schiff, the notorious gold-bug, tweeted:

The Dogecoin "price" graph Kelly posted was almost vertical. The same day, Peter Schiff, the notorious gold-bug, tweeted:So far in 2021 #Bitcoin has lost 97% of its value verses #Dogecoin. The market has spoken. Dogecoin is eating Bitcoin. All the Bitcoin pumpers who claim Bitcoin is better than gold because its price has risen more than gold's must now concede that Dogecoin is better than Bitcoin.Below the fold I look back at this revolution in crypto-land.

Bitcoin’s hashrate dropped 25% from all-time highs after an accident in the Xinjiang region’s mining industry caused flooding and a gas explosion, leading to 12 deaths with 21 workers trapped since.The drop in the hash rate had the obvious effects. David Gerard reports:

...

The leading Bitcoin mining data centers in the region have closed operations to comply with the fire and safety inspections.

The Chinese central authority is conducting site inspections “on individual mining operations and related local government agencies,” tweeted Dovey Wan, partner at Primitive Crypto.

...

The accident has reignited the centralization problems arising from China’s dominance of the Bitcoin mining sector, despite global expansion efforts.

The Bitcoin hash rate dropped from 220 exahashes per second to 165 EH/s. The rate of new blocks slowed. The Bitcoin mempool — the backlog of transactions waiting to be processed — has filled. Transaction fees peaked at just over $50 average on 18 April.The average BTC transaction fee is now just short of $60, with a median fee over $26! The BTC blockchain did around 350K transactions on April 15, but on April 16 it could only manage 190K.

Dogecoin’s rise is a classic example of greater fool theory at play, Dogecoin investors are basically betting they’ll be able to cash out by selling to the next person wanting to invest. People are buying the cryptocurrency, not because they think it has any meaningful value, but because they hope others will pile in, push the price up and then they can sell off and make a quick buck.Kelly also quotes Khadim Shubber explaining that this is all just entertainment:

But when everyone is doing this, the bubble eventually has to burst and you’re going to be left short-changed if you don’t get out in time. And it’s almost impossible to say when that’s going to happen.

Bitcoin, and cryptocurrencies in general, are not directly analogous to the fairly mundane practice of buying a Lottery ticket, but this part of its appeal is often ignored in favour of more intellectual or high-brow explanations.Note the importance of volatility. In a must-read interview that New York Magazine entitled BidenBucks Is Beeple Is Bitcoin Prof. George Galloway also stressed the importance of volatility:

It has all the hallmarks of a fun game, played out across the planet with few barriers to entry and all the joy and pain that usually accompanies gambling.

There’s a single, addictive reward system: the price. The volatility of cryptocurrencies is often highlighted as a failing, but in fact it’s a key part of its appeal. Where’s the fun in an asset whose price snoozes along a predictable path?

The rollercoaster rise and fall and rise again of the crypto world means that it’s never boring. If it’s down one day (and boy was it down yesterday) well, maybe the next day it’ll be up again.

Young people want volatility. If you have assets and you’re already rich, you want to take volatility down. You want things to stay the way they are. But young people are willing to take risks because they can afford to lose everything. For the opportunity to double their money, they will risk losing everything. Imagine a person who has the least to lose: He’s in solitary confinement in a supermax-security prison. That person wants maximum volatility. He prays for such volatility, that there’s a revolution and they open the prison.This all reinforces my skepticism about the "price" and "market cap" of cryptocurrencies. 2021-04-22T16:00:00+00:00 David. (noreply@blogger.com) David Rosenthal: What Is The Point? https://blog.dshr.org/2021/04/what-is-point.html During a discussion of NFTs, Larry Masinter pointed me to his 2012 proposal The 'tdb' and 'duri' URI schemes, based on dated URIs. The proposal's abstract reads:

People under the age of 40 are fed up. They have less than half of the economic security, as measured by the ratio of wealth to income, that their parents did at their age. Their share of overall wealth has crashed. A lot of them are bored. A lot of them have some stimulus money in their pocket. And in the case of GameStop, they did what’s kind of a mob short squeeze.

...

I see crypto as a mini-revolution, just like GameStop. The central banks and governments are all conspiring to create more money to keep the shareholder class wealthy. Young people think, That’s not good for me, so I’m going to exit the ecosystem and I’m going to create my own currency.

This document defines two URI schemes. The first, 'duri' (standingAs far as I can tell, this proposal went nowhere, but it raises a question that is also raised by NFTs. What is the point of a link that is unlikely to continue to resolve to the expected content? Below the fold I explore this question.

for "dated URI"), identifies a resource as of a particular time.

This allows explicit reference to the "time of retrieval", similar to

the way in which bibliographic references containing URIs are often

written.

The second scheme, 'tdb' ( standing for "Thing Described By"),

provides a way of minting URIs for anything that can be described, by

the means of identifying a description as of a particular time.

These schemes were posited as "thought experiments", and therefore

this document is designated as Experimental.

There are many URIs which are, unfortunately, not particularlyPersonalization, advertisements, geolocation, watermarks, all make it very unlikely that either several clients accessing the same URI at the same time, or a single client accessing the same URI at different times, would see the same content.

"uniform", in the sense that two clients can observe completely

different content for the same resource, at exactly the same time.

There are no direct resolution servers or processes for 'duri' orBut the duri: URI doesn't provide the information needed to resolve to the "cached or archived" content. The Internet Archive's Wayback Machine uses URIs which, instead of the prefix duri:[datetime]: have the prefix https://web.archive.org/web/[datetime]/. This is more useful, both because browsers will actually resolve these URIs, and because they resolve to a service devoted to delivering the content of the URI at the specified time.

'tdb' URIs. However, a 'duri' URI might be "resolvable" in the sense

that a resource that was accessed at a point in time might have the

result of that access cached or archived in an Internet archive

service. See, for example, the "Internet Archive" project

Photo by Bálint Szabó on Unsplash

Photo by Bálint Szabó on UnsplashAs the COVID pandemic grinds on, vaccinations are top of mind. A recent article published in JAMA Network Open examined whether vaccination clinical trials over the last decade adequately represented various demographic groups in their studies. According to the authors, the results suggested they did not: “among US-based vaccine clinical trials, members of racial/ethnic minority groups and older adults were underrepresented, whereas female adults were overrepresented.” The authors concluded that “diversity enrollment targets should be included for all vaccine trials targeting epidemiologically important infections.”

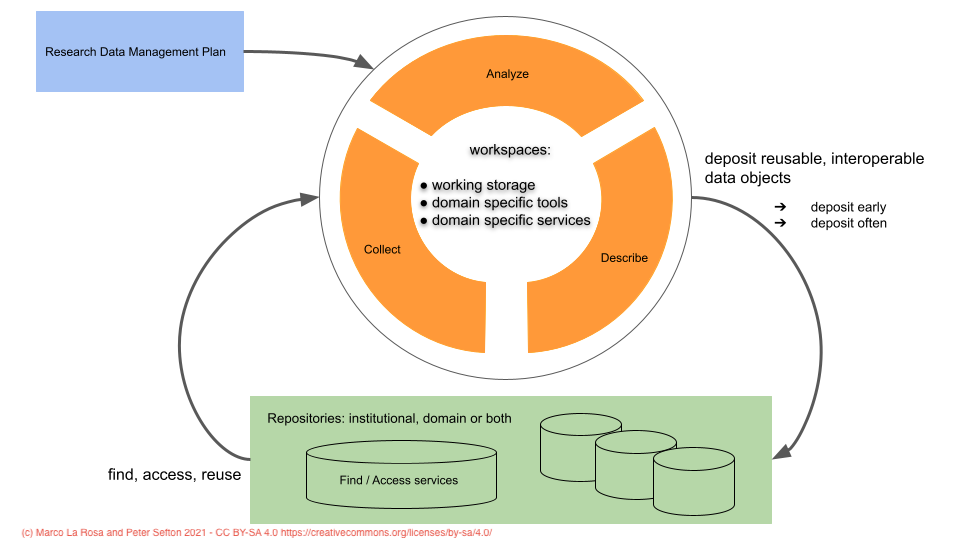

My colleague Rebecca Bryant and I recently enjoyed an interesting and thought-provoking conversation with Dr. Tiffany Grant, Assistant Director for Research and Informatics with the University of Cincinnati Libraries (an OCLC Research Library Partnership member) on the topic of bias in research data. Dr. Grant neatly summed up the issue by observing that data collected should be inclusive of all the groups who are impacted by outcomes. As the JAMA article illustrates, that is clearly not always the case – and the consequences can be significant for decision- and policy-making in critical areas like health care.

The issue of bias in research data has been acknowledged for some time; for example, the launch of the Human Genome Project in the late 1990s/early 2000s helped raise awareness of the problem, as did observed differences in health care outcomes across demographic groups. And efforts are underway to help remedy some of the gaps. One initiative, the US National Institutes of Health’s All of Us Research Program, aims to build a database of health data collected from a diverse cohort of at least one million participants. The rationale for the project is clearly laid out: “To develop individualized plans for disease prevention and treatment, researchers need more data about the differences that make each of us unique. Having a diverse group of participants can lead to important breakthroughs. These discoveries may help make health care better for everyone.”

Extrapolation of findings observed in one group to all other groups often leads to poor inferences, and researchers should take this into account when designing data collection strategies. The peer review process should act as a filter for identifying research studies that overlook this point in their design – but how well is it working? As in many other aspects of our work and social lives, unconscious bias may play a role here: lack of awareness of the problem on the part of reviewers means that studies with flawed research designs may slip through.

And that leads us to what Dr. Grant believes is the principal remedy for the problem of bias in research data: education. Researchers need training that helps them recognize potential sources of bias in data collection, as well as understand the implications of bias for interpretation and generalization of their findings. The first step in solving a problem is to recognize that there is a problem. Some disciplines are further along than others in addressing bias in research data, but in Dr. Grant’s view, there is still ample scope for raising awareness across campus about this topic.

Academic libraries can help with this, by providing workshops and training programs, and gathering relevant information resources. At the University of Cincinnati, librarians are often embedded in research teams, providing an excellent opportunity to share their expertise on this issue. Raising awareness about bias in research data is also an opportunity to partner with other campus units, such as the office of research, colleges/schools, and research institutes (for more information on how to develop and sustain cross-campus partnerships around research support services see our recent OCLC Research report on social interoperability).

Many institutions are currently implementing Equality, Diversity, and Inclusion (EDI) training, and modules addressing bias in research data might be introduced as part of EDI curricula for researchers. This could also be an area of focus for professional development programs supporting doctoral, postdoctoral, and other early-career researchers. It seems that many EDI initiatives focus on issues related to personal interactions or recruiting more members of underrepresented groups into the field. For researchers, it may be useful to supplement this training with additional programs that focus on EDI issues as they specifically relate to the responsible conduct of research. In other words, how do EDI-related issues manifest in the research process, and how can researchers effectively address them? A great example is the training offered by We All Count, a project aimed at increasing equity in data science.

Funders can also contribute toward mitigating bias in research data, by issuing research design guidelines on inclusion of underrepresented groups, and by establishing criteria for scoring grant proposals on the basis of how well these guidelines are addressed. The big “carrots and sticks” wielded by funders are a powerful tool for both raising awareness and shifting behaviors.

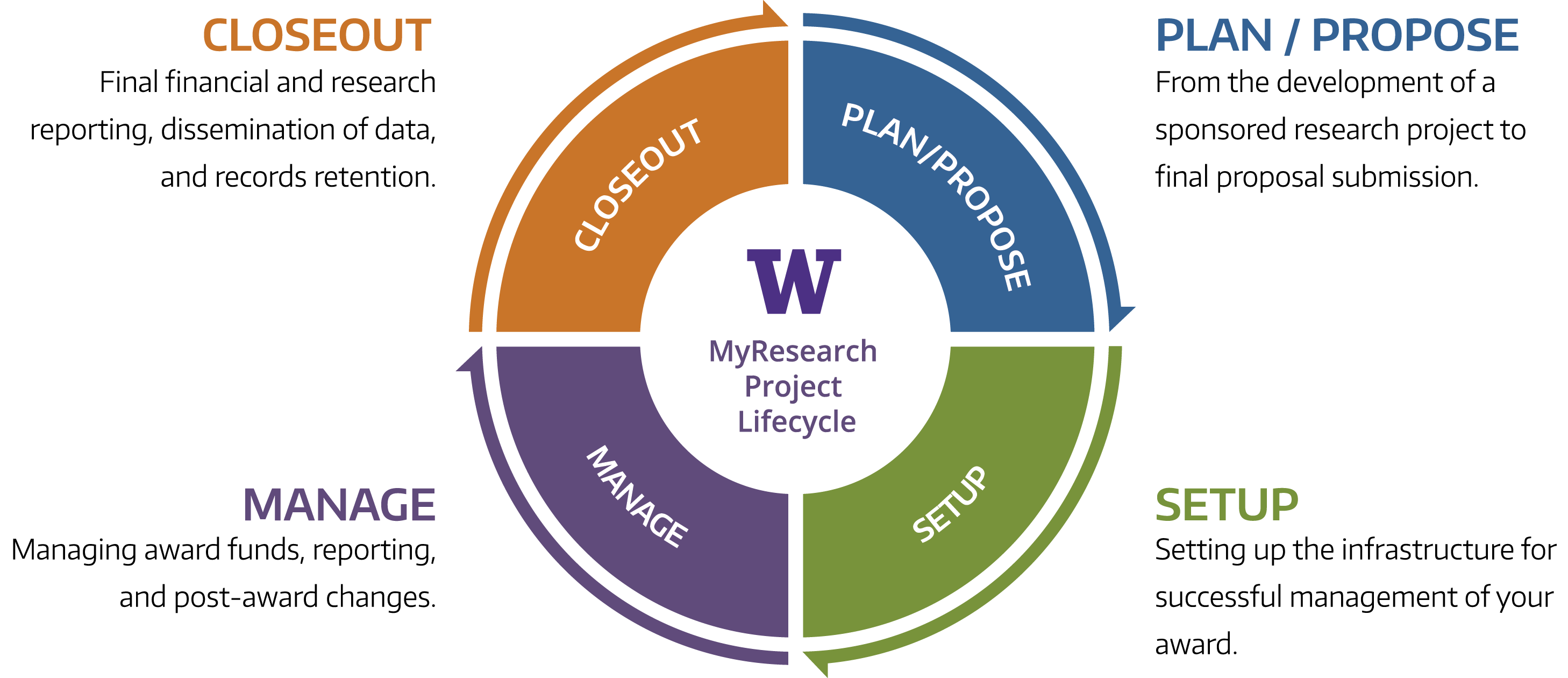

Bias in research data extends to bias in research data management (RDM). Situations where access to and ability to use archived data sets is not equitable is another form of bias. While it is good to mandate that data sets be archived under “open” conditions, as many funders already do, the spirit of the mandate is compromised if the data sets are put into systems that are not accessible and usable to everyone. It is important to recognize that the risk of introducing bias into research data exists throughout the research lifecycle, including curation activities such as data storage, description, and preservation.

Our conversation focused on bias in research data in STEM fields – particularly medicine – but the issue also deserves attention in the context of the social sciences, as well as the arts and humanities. Our summary here highlights just a sample of the topics worthy of discussion in this area, with much to unpack in each one. We are grateful to Dr. Grant for starting a conversation with us on this important issue and look forward to continuing it in the future as part of our ongoing work on RDM and other forms of research support services.

Like so many other organizations, OCLC is reflecting on equity, diversity, and inclusion, as well as taking action. Check out an overview of that work, and explore efforts being undertaken in OCLC’s Membership and Research Division. Thanks to Tiffany Grant, Rebecca Bryant, and Merrilee Proffitt for providing helpful suggestions that improved this post!

The post Recognizing bias in research data – and research data management appeared first on Hanging Together.

Learn how AI-powered recommenders put the right products and content in front of your customers, with just the right amount of human touch.

The post Enhance Product Discovery with AI-Powered Recommenders appeared first on Lucidworks.

2021-04-21T15:35:11+00:00 Andy Wibbels Tara Robertson: Distributing DEI Work Across the Organization https://tararobertson.ca/2021/distributing-dei-work-across-the-organization/I enjoyed being a guest on Seed&Spark‘s first monthly office hours session where Stefanie Monge, Lara McLeod and I talked about distributing diversity, equity and inclusion work across organizations.

The post Distributing DEI Work Across the Organization appeared first on Tara Robertson Consulting.

2021-04-20T17:17:50+00:00 Tara Robertson Terry Reese: Thoughts on NACOs proposed process on updating CJK records https://blog.reeset.net/archives/2967I would like to take a few minutes and share my thoughts about an updated best practice recently posted by the PCC and NACO related to an update on CJK records. The update is found here: https://www.loc.gov/aba/pcc/naco/CJK/CJK-Best-Practice-NCR.docx. I’m not certain if this is active or a simply a proposal, but I’ve been having a number of private discussions with members at the Library of Congress and the PCC as I’ve been trying to understand the genesis for this policy change. I personally believe that formally adopting a policy like this would be exceptionally problematic, and I wanted to flesh out my thoughts on why and some potential better options that could fix the issue that this problem is attempting to solve.

But first, I owe some folks an apology. In chatting with some folks at LC (because, let’s be clear, this proposal was created specifically because there are local, limiting practices at LC that artificially are complicating this work) – it came to my attention that the individuals that spent a good deal of time considering and creating this proposal have received some unfair criticism – and I think I bare a lot of responsibility for that. I have done work creating best practices and standards and its thankless, difficult work. Because of that, in cases where I disagree with a particular best practice, my preference has been to address those privately and attempt to understand and share my issues with a set of practices. This is what I have been doing related to this work. However, on the MarcEdit list (a private list), when a request was made related to a feature request in MarcEdit to support this work – I was less thoughtful in my response as the proposed change could fundamentally undo almost a decade of work as I have dealt with thousands of libraries stymied by these kinds of best practices that have significant unintended consequences. My regret is that I’ve been told that my thoughts shared on the MarcEdit list, have been used by others in more public spaces to take this committee’s work to task. This is unfortunate and disappointing, and something I should have been more thoughtful of in my responses on the MarcEdit list. Especially, given that every member of that committee is doing this work as a service to the community. I know I forget that sometimes. So, to the folks that did this work – I’ve not followed (or seen) any feedback you may have received, but in as much that I’m sure I played a part in any push back you may have received, I’m sorry.

If you look at the proposal, I think that the writers do a good job identifying the issue. Essentially, this issue is unique to authority records. At present, NACO still requires that records created within the program only utilize UTF8 characters that fall within the MARC-8 repertoire. OCLC, the pipeline for creating these records, enforces this rule by invalidating records with UTF8 characters outside the MARC8 range. The proposal seeks to address this by encouraging the use of NRC (Numeric Character Reference) data in UTF8 records, to work around these normalization issues.

So, in a nutshell, that is the problem, and that is the proposed solution. But before we move on, let’s talk a little bit about how we got here. This problem currently exists because of, what I believe to be, an extremely narrow and unproductive read of what MARC8 repertoire actually means. For those not in Libraries, MARC8 is essentially a made-up character encoding, used only in libraries, that has so outlived its usefulness. Modern systems have largely stopped supporting it outside of legacy ingest workflows. The issue is that for every academic library or national library that has transitioned to UTF8, hundreds of small libraries or organizations around the world have not. MARC8 continues to exist because the infrastructure that supports these smaller libraries is built around it.

But again, I think it is worth thinking about today, what actually is the MARC8 repertoire. Previously, this had been a hard set of defined values. But really, that changed in 2004ish when LC updated guidance and introduced the concept of NRCs to preserve lossless data transfer between systems that were fully UTF8 compliant and older MARC8 systems. NRCs in MARC8 were workable, because it left local systems the ability to handle (or not handle) the data as it seen fit and finally provided an avenue for the Library community as a whole to move on from the limitations MARC8 was imposing on systems. It allowed for the facilitation of data into non-MARC formats that were UTF8 compliant and provided a pathway to allow data from other metadata formats, the ability to reuse that data in MARC records. I would argue that today, the MARC8 repertoire includes NRC notation – and to assume or pretend otherwise, is shortsighted and revisionist.

But why is all of this important. Well, it is at the heart of the problem that we find ourselves in. For authority data, the Library of Congress appears to have adopted this very narrow view of what MARC8 means (against their own stated recommendations) and as a result, NACO and OCLC place artificial limits on the pipeline. There are lots of reasons why LC does this, I recognize they are moving slowly because any changes that they make are often met with some level of resistance from members of our community – but in this case, this paralysis is causing more harm to the community than good.

So, this is the environment that we are working in and the issue this proposal sought to solve. The issue, however, is that the proposal attempts to solve this problem by adopting a MARC8 solution and applying it within UTF8 data – essentially making the case that NRC values can be embedded in UTF8 records to ensure lossless data entry. And while I can see why someone might think that – that assumption is fundamentally incorrect. When LC developed its guidance on NRC notation, this was guidance that was specifically directed in the lossless translation of data to MARC8. UTF8 data has no need for NRC notation. This does not mean that it does not sometimes show up – and as a practical purpose, I’ve spent thousands of hours working with Libraries dealing with the issues this creates in local systems. Aside from the issues this creates in MARC systems around indexing and discovery, it makes data almost impossible to be used outside of that system and in times of migration. In thinking about the implications of this change in the context of MarcEdit, I had the following, specific concerns:

While I very much appreciate the issue that this is attempting to solve, I’ve spent years working with libraries where this kind of practice would introduce a long-term data issue that is very difficult to identify and fix and often shows up unexpectedly when it comes time to migration or share this information with other services, communities, or organizations.

I think that we can address this issue on two fronts. First, I would advise NACO and OCLC to essentially stop limiting data entry to this very limited notion of MARC8 repertoire. In all other contexts, OCLC provides the ability to enter any valid UTF8 data. This current limit within the authority process is artificial and unnecessary. OCLC could easily remove it, and NACO could amend their process to allow record entry to utilize any valid UTF8 character. This would address the problem that this group was attempting to solve for catalogers creating these records.

The second step could take two forms. If LC continues to ignore their own guidance and cleave to an outdated concept of the MARC8 repertoire – OCLC could provide to LC via their pipeline a version of the records where data includes NRC notation for use in LCs own systems. It would mean that I would not recommend using LC as a trusted system for downloading authorities if this was the practice unless I had an internal local process to remove any NRC data found in valid UTF8 records. Essentially, we essentially treat LC’s requirements as a disease and quarantine them and their influence in this process. Of course, what would be more ideal, is LC making the decision to accept UTF8 data without restrictions and rely on applicable guidance and MARC21 best practice by supporting UTF8 data fully, and for those still needing MARC8 data – providing that data using the lossless process of NRCs (per their own recommendations).

Ultimately, this proposal is a recognition that the current NACO rules and process is broken and broken in a way that it is actively undermining other work in the PCC around linked data development. And while I very much appreciate the thoughtful work that went into the consideration of a different approach, I think the unintended side affects would cause more long-term damage that any short-term gains. Ultimately, what we need is for the principles to rethink why these limitations are in place, and, honestly, really consider ways that we start to deemphasize the role LC plays as a standard holder if in that role, LC’s presence continues to be an impediment for moving libraries forward.

2021-04-20T16:56:13+00:00 reeset Lucidworks: How to Deliver Impactful Digital Commerce Experiences https://lucidworks.com/post/deliver-relevant-digital-commerce-experiences/Acquia and Lucidworks share tips for how to deliver meaningful and relevant digital commerce experiences that create customer connections.

The post How to Deliver Impactful Digital Commerce Experiences appeared first on Lucidworks.

2021-04-20T16:32:04+00:00 Jenny Gomez HangingTogether: Accomplishments and priorities for the OCLC Research Library Partnership http://feedproxy.google.com/~r/Hangingtogetherorg/~3/sV5OSw6YBAI/With 2021 well underway, the OCLC Research Library Partnership is as active as ever. We are heartened by the positive feedback and engagement our Partners have provided in response to our programming and research directions. Thank you to those who have shared your stories of success and challenge; listening to your voices is what guides us and drives us forward. We warmly welcome the University of Notre Dame, University of Waterloo, and OCAD University into the Partnership and are pleased to see how they have jumped right into engagement with SHARES and other activities.

Photo by Caleb Chen on Unsplash

Photo by Caleb Chen on UnsplashThe SHARES community has been a source of support and encouragement as resource sharing professionals around the world strive to meet their communities’ information needs during COVID-19. During the last year, Dennis Massie has convened more than 50 SHARES town halls to date to learn how SHARES members are changing practice to adapt to quickly evolving circumstances. Dennis has documented how resource sharing practices have changed.

Inspired by the SHARES community, we are also excited to have launched the OCLC Interlibrary Loan Cost Calculator. For library administrators and funders to evaluate collection sharing services properly, they need access to current cost information, as well as benchmarks against which to measure their own library’s data. The Cost Calculator is a free online tool that has the potential to act as a virtual real-time ILL cost study. Designed in collaboration with resource sharing experts and built by OCLC Research staff, the calculator has been in the hands of beta testers and early adopters since October 2019. A recorded webinar gives a guided tour of what the tool does (and does not do), what information users need to gather, how developers addressed privacy issues, and how individual institutions and the library community can benefit.

A big thanks to our Partners who contributed to the Total Cost of Stewardship: Responsible Collection Building in Archives and Special Collections. This publication addresses the ongoing challenge of descriptive backlogs in archives and special collections by connecting collection development decisions with stewardship responsibilities. The report proposes a Total Cost of Stewardship framework for bringing together these important, interconnected functions. Developed by the RLP’s Collection Building and Operational Impacts Working Group, the Total Cost of Stewardship Framework is a model that considers the value of a potential acquisition and its alignment with institutional mission and goals alongside the cost to acquire, care for, and manage it, the labor and specialized skills required to do that work, and institutional capacity to care for and store collections.

This publication includes a suite of communication and cost estimation tools to help decision makers assess available resources, budgets, and timelines to plan with confidence and set realistic expectations to meet important goals. The report and accompanying resources provide special collections and archives with tools to support their efforts to meet the challenges of contemporary collecting and to ensure they are equitably serving and broadly documenting their communities.

In December, we had a bittersweet moment celebrating Senior Program Officer Karen Smith-Yoshimura’s retirement. As Mercy Procaccini and others take over the role of coordinating the stalwart Metadata Managers Focus Group, we are taking time to refine how this dynamic group works and plans future discussions together to better support their efforts. A synthesis of this group’s discussions from the past six years traces how metadata services are transitioning to the “next generation of metadata.”

The RLP’s commitment to advancing learning and operational support for linked data continues with the January publication of Transforming Metadata into Linked Data to Improve Digital Collection Discoverability: A CONTENTdm Pilot Project. The report details a pilot project that investigated methods for—and the feasibility of—transforming metadata into linked data to improve the discoverability and management of digitized cultural materials and their descriptions. Five institutions partnered with OCLC to collaborate on this linked data project, representing a diverse cross-section of different types of institutions: The Cleveland Public Library The Huntington Library, Art Museum, and Botanical Gardens The Minnesota Digital Library Temple University Libraries University of Miami Libraries.

OCLC has invested in pathbreaking linked data work for over a decade, and it is wonderful to add the publication to this knowledge base.

In the area of research support, Rebecca Bryant developed a robust series of webinars as a follow-on to the 2019–2020 OCLC Research project, Social Interoperability in Research Support. The resulting report, Social Interoperability in Research Support: Cross-campus Partnerships and the University Research Enterprise, synthesizes information about the highly decentralized, complex research support ecosystem at US research institutions. The report additionally offers a conceptual model of campus research support stakeholders and provides recommendations for establishing and stewarding successful cross-campus relationships. The social interoperability webinar series complements this work by offering in-depth case studies and “stakeholder spotlights” from RLP institutions, demonstrating how other campus are eager to collaborate with the library. This is a great example of the type of programming you can find in our Works in Progress Webinar Series.

Our team has been digging into issues of equity, diversity, and inclusion: we’ve developed a “practice group” to help our team be better situated to engaging in difficult conversations around race, and we also have been learning and engaging in conversations about the difficulty of cataloging topics relating to Indigenous peoples in respectful ways.

This work has helped to prepare the way for important new work that I’m pleased to share with you today. OCLC will be working in consultation with Shift Collective on The Andrew W. Mellon-funded convening, Reimagine Descriptive Workflows. The project will bring together a wide range of community stakeholders to interrogate the existing descriptive workflow infrastructure to imagine new workflows that are inclusive, equitable, scalable, and sustainable. We are following an approach developed in other work we have carried out, such as the Research and Learning Agenda for Archives, Special, and Distinctive Collections in Research Libraries, and more recently, in Responsible Operations: Data Science, Machine Learning, and AI in Libraries. In that vein, we will host a virtual convening later this year to inform a Community Agenda publication.

Reimagine Descriptive Workflows is the next stage of a journey that we’ve been on for some time, informed by numerous webinars, surveys, and individual conversations. I am very grateful to team members and the RLP community for their contributions and guidance. We are truly “learning together.”

If you are at an OCLC RLP affiliated institution and would like to learn more about how to get the most out of your RLP affiliation, please contact your staff liaison (or anyone on our energetic team) and we be happy to set up a virtual orientation or refresher on our programs and opportunities for active learning.

It is with deep gratitude that I offer my thanks to to our Partners for their investment in the Research Library Partnership. We are committed to offering our very best to serve your research and learning needs.

The post Accomplishments and priorities for the OCLC Research Library Partnership appeared first on Hanging Together.

This week, five shortlisted teams took part in the final stage of the Net Zero Challenge – a global competition to identify, promote and support innovative, practical and scalable uses of open data that advance climate action.

The five teams presented their three-minute project pitches to the Net Zero Challenge Panel of Experts, and a live audience. Each pitch was followed by a live Q&A.

The winner of the pitch contest will be announced in the next few days.

If you didn’t have the chance to attend the event in person, watch the event here (46.08.min) or see below for links to individual pitches.

A full unedited video of the event is at the bottom of this page.

Introduction – by James Hamilton, Director of the Net Zero Challenge

Watch video here (4.50min) // Introduction Slide Deck

Pitch 1 – by Matt Sullivan from Snapshot Climate Tool which provides greenhouse gas emission profiles for every local government region (municipality) in Australia.

Watch pitch video here (10.25min) // Snapshot Slide Deck

Pitch 2 – by Saif Shabou from CarbonGeoScales which is a framework for standardising open data for green house gas emissions at multiple geographical scales (built by a team from France).

Watch pitch video here (9.07min) // CarbonGeoScales Slide Deck

Pitch 3 – by Jeremy Dickens. He presents Citizen Science Avian Index for Sustainable Forests a new bio monitoring tool that uses open data on bird observations to provide crucial information on forest ecological conditions (from South Africa).

Watch pitch video here (7.03min) // Avian Index – Slide Deck

Pitch 4 – by Cristian Gregorini from Project Yarquen which is a new API tool and website to organise climate relevant open data for use by civil society organisations, environmental activists, data journalists and people interested in environmental issues (built by a team from Argentina).

Watch pitch video here (8.20min)

Pitch 5 – by Beatriz Pagy from Clima de Eleição which analyses recognition of climate change issues by prospective election candidates in Brazil, enabling voters to make informed decisions about who to vote in to office.

Watch pitch video here (5.37min) // Clima de Eleição – Slide Deck

Concluding remarks – by James Hamilton, Director of the Net Zero Challenge

A full unedited video of the Net Zero Challenge is here (55.28min)

There are many people who collaborated to make this event possible.

We wish to thank both Microsoft and the UK Foreign, Commonwealth & Development Office for their support for the Net Zero Challenge. Thanks also to Open Data Charter and the Open Data & Innovation Team at Transport for New South Wales for their strategic advice during the development of this project. The event would not have been possible without the enthusiastic hard work of the Panel of Experts who will judge the winning entry, and the audience who asked such great questions. Finally – to all the pitch teams. Your projects inspire us and we hope your participation in the Net Zero Challenge has been – and will continue to be – supportive for your work as you use open data to advance climate action.

2021-04-19T09:43:32+00:00 James Hamilton Hugh Rundle: A barbaric yawp https://www.hughrundle.net/a-barbaric-yawp/Over the Easter break I made a little Rust tool for sending toots and/or tweets from a command line. Of course there are dozens of existing tools that enable either of these, but I had a specific use in mind, and also wanted a reasonably small and achievable project to keep learning Rust.

For various reasons I've recently been thinking about the power of "the Unix philosophy", generally summarised as:

- Write programs that do one thing and do it well.

- Write programs to work together.

- Write programs to handle text streams, because that is a universal interface.

My little program takes a text string as input, and sends the same string to the output, the intention being not so much that it would normally be used manually on its own (though it can be) but more that it can "work together" with other programs or scripts. The "one thing" it does (I will leave the question of "well" to other people to judge) is post a tweet and/or toot to social media. It's very much a unidirectional, broadcast tool, not one for having a conversation. In that sense, it's like Whitman's "Barbaric yawp", subject of my favourite scene in Dead Poets Society and a pretty nice description of what social media has become in a decade or so. Calling the program yawp therefore seemed fitting.

yawp takes text from standard input (stdin), publishes that text as a tweet and/or a toot, and then prints it to standard output (stdout). Like I said, it's not particularly complex, and not even all that useful for your daily social media posting needs, but the point is for it to be part of a tool chain. For this reason yawp takes the configuration it needs to interact with the Mastodon and Twitter APIs from environment (ENV) variables, because these are quite easy to set programatically and a fairly "universal interface" for setting and getting values to be used in programs.

Here's a simple example of sending a tweet:

yawp 'Hello, World!' -tWe could also send a toot by piping from the echo program (the - tells yawp to use stdin instead of looking for an argument like it uses above):

echo 'Hello again, World!' | yawp - -mIn bash, you can send the contents of a file to stdin, so we could do this too:

yawp - -mt <message.txtBut really the point is to use yawp to do something like this:

app_that_creates_message | yawp - -mt | do_something_else.sh >> yawping.logAnyway, enjoy firing your barbaric yawps into the cacophony.

“Let’s blog every Friday,” I thought. “It’ll be great. People can see what I’m doing with ML, and it will be a useful practice for me!” And then I went through weeks on end of feeling like I had nothing to report because I was trying approach after approach to this one problem that simply didn’t work, hence not blogging. And finally realized: oh, the process is the thing to talk about…

Hi. I’m Andromeda! I am trying to make a neural net better at recognizing people in archival photos. After running a series of experiments — enough for me to have written 3,804 words of notes — I now have a neural net that is ten times worse at its task.

And now I have 3,804 words of notes to turn into a blog post (a situation which gets harder every week). So let me catch you up on the outline of the problem:

Step 3: profit, right? Well. Let me also catch you up on some problems along the way:

Archival photos are great because they have metadata, and metadata is like labels, and labels mean you can do supervised learning, right?

Well….

Is he “Du Bois, W. E. B. (William Edward Burghardt), 1868-1963” or “Du Bois, W. E. B. (William Edward Burghardt) 1868-1963” or “Du Bois, W. E. B. (William Edward Burghardt)” or “W.E.B. Du Bois”? I mean, these are all options. People have used a lot of different metadata practices at different institutions and in different times. But I’m going to confuse the poor computer if I imply to it that all these photos of the same person are photos of different people. (I have gone through several attempts to resolve this computationally without needing to do everything by hand, with only modest success.)

What about “Photographs”? That appears in the list of subject labels for lots of things in my data set. “Photographs” is a person, right? I ended up pulling in an entire other ML component here — spaCy, to do some natural language processing to at least guess which lines are probably names, so I can clear the rest of them out of my way. But spaCy only has ~90% accuracy on personal names anyway and, guess what, because everything is terrible, in predictable ways, it has no idea “Kweisi Mfume” is a person.

Is a person who appears in the photo guaranteed to be a person who appears in the photo? Nope.

Is a person who appears in the metadata guaranteed to be a person who appears in the photo? Also nope! Often they’re a photographer or other creator. Sometimes they are the subject of the depicted event, but not themselves in the photo. (spaCy will happily tell you that there’s personal name content in something like “Martin Luther King Day”, but MLK is unlikely to appear in a photo of an MLK day event.)

OK but let’s imagine for the sake of argument that we live in a perfect world where the metadata is exactly what we need — no more, no less — and its formatting is perfectly consistent.

Here you are, in this perfect world, confronted with a photo that contains two people and has two names. How do you like them apples?

I spent more time than I care to admit trying to figure this out. Can I bootstrap from photos that have one person and one name — identify those, subtract them out of photos of two people, go from there? (Not reliably — there’s a lot of data I never reach that way — and it’s horribly inefficient.)

Can I do something extremely clever with matrix multiplication? Like…once I generate vector space embeddings of all the photos, can I do some sort of like dot-product thing across all of my photos, or big batches of them, and correlate the closest-match photos with overlaps in metadata? Not only is this a process which begs the question — I’d have to do that with the ML system I have not yet optimized for archival photo recognition, thus possibly just baking bad data in — but have I mentioned I have taken exactly one linear algebra class, which I didn’t really grasp, in 1995?

What if I train yet another ML system to do some kind of k-means clustering on the embeddings? This is both a promising approach and some really first-rate yak-shaving, combining all the question-begging concerns of the previous paragraph with all the crystalline clarity of black box ML.

Possibly at this point it would have been faster to tag them all by hand, but that would be admitting defeat. Also I don’t have a research assistant, which, let’s be honest, is the person who would usually be doing this actual work. I do have a 14-year-old and I am strongly considering paying her to do it for me, but to facilitate that I’d have to actually build a web interface and probably learn more about AWS, and the prospect of reading AWS documentation has a bracing way of reminding me of all of the more delightful and engaging elements of my todo list, like calling some people on the actual telephone to sort out however they’ve screwed up some health insurance billing.

Despite all of that, I did actually get all the way through the 5 steps above. I have a truly, spectacularly terrible neural net. Go me! But at a thousand-plus words, perhaps I should leave that story for next week….

2021-04-16T21:08:54+00:00 Andromeda Lucidworks: Tips for Mixed Reality in Retail https://lucidworks.com/post/tips-for-mixed-reality-in-retail/How retailers are turning to virtual reality, augmented reality, and mixed reality applications to recreate the in-store experience from anywhere.

The post Tips for Mixed Reality in Retail appeared first on Lucidworks.

2021-04-16T17:51:10+00:00 Andy Wibbels Erin White: Talk: Using light from the dumpster fire to illuminate a more just digital world https://erinrwhite.com/talk-using-light-from-the-dumpster-fire-to-illuminate-a-more-just-digital-world/This February I gave a lightning talk for the Richmond Design Group. My question: what if we use the light from the dumpster fire of 2020 to see an equitable, just digital world? How can we change our thinking to build the future web we need?

Presentation is embedded here; text of talk is below.

Hi everybody, I’m Erin. Before I get started I want to say thank you to the RVA Design Group organizers. This is hard work and some folks have been doing it for YEARS. Thank you to the organizers of this group for doing this work and for inviting me to speak.

This talk isn’t about 2020. This talk is about the future. But to understand the future, we gotta look back.

Travel with me to 1996. Twenty-five years ago!

I want to transport us back to the mindset of the early web. The fundamental idea of hyperlinks, which we now take for granted, really twisted everyone’s noodles. So much of the promise of the early web was that with broad access to publish in hypertext, the opportunities were limitless. Technologists saw the web as an equalizing space where systems of oppression that exist in the real world wouldn’t matter, and that we’d all be equal and free from prejudice. Nice idea, right?

You don’t need to’ve been around since 1996 to know that’s just not the way things have gone down.

Pictured before you are some of the early web pioneers. Notice a pattern here?

These early visions of the web, including Barlow’s declaration of independence of cyberspace, while inspiring and exciting, were crafted by the same types of folks who wrote the actual declaration of independence: the landed gentry, white men with privilege. Their vision for the web echoed the declaration of independence’s authors’ attempts to describe the world they envisioned. And what followed was the inevitable conflict with reality.

We all now hold these truths to be self-evident:

Profit first: monetization, ads, the funnel, dark patterns

Can we?: Innovation for innovation’s sake

Solutionism: code will save us

Visual design: aesthetics over usability

Lone genius: “hard” skills and rock star coders

Short term thinking: move fast, break stuff

Shipping: new features, forsaking infrastructure

Let’s move forward quickly through the past 25 years or so of the web, of digital design.

All of the web we know today has been shaped in some way by intersecting matrices of domination: colonialism, capitalism, white supremacy, patriarchy. (Thank you, bell hooks.)

The digital worlds where we spend our time – and that we build!! – exist in this way.

This is not an indictment of anyone’s individual work, so please don’t take it personally. What I’m talking about here is the digital milieu where we live our lives.

The funnel drives everything. Folks who work in nonprofits and public entities often tie ourselves in knots to retrofit our use cases in order to use common web tools (google analytics, anyone?)

In chasing innovation we often overlook important infrastructure work, and devalue work — like web accessibility, truly user-centered design, care work, documentation, customer support and even care for ourselves and our teams — that doesn’t drive the bottom line. We frequently write checks for our future selves to cash, knowing damn well that we’ll keep burying ourselves in technical debt. That’s some tough stuff for us to carry with us every day.

we often overlook important infrastructure work, and devalue work — like web accessibility, truly user-centered design, care work, documentation, customer support and even care for ourselves and our teams — that doesn’t drive the bottom line. We frequently write checks for our future selves to cash, knowing damn well that we’ll keep burying ourselves in technical debt. That’s some tough stuff for us to carry with us every day.

The “move fast” mentality has resulted in explosive growth, but at what cost? And in creating urgency where it doesn’t need to exist, focusing on new things rather than repair, the end result is that we’re building a house of cards. And we’re exhausted.

To zoom way out, this is another manifestation of late capitalism. Emphasis on LATE. Because…2020 happened.

Hard times amplify existing inequalities

Cutting corners mortgages our future

Infrastructure is essential

“Colorblind”/color-evasive policy doesn’t cut it

Inclusive design is vital

We have a duty to each other

Technology is only one piece

Together, we rise

The past year has been awful for pretty much everybody.

But what the light from this dumpster fire has illuminated is that things have actually been awful for a lot of people, for a long time. This year has shown us how perilous it is to avoid important infrastructure work and to pursue innovation over access. It’s also shown us that what is sometimes referred to as colorblindness — I use the term color-evasiveness because it is not ableist and it is more accurate — a color-evasive approach that assumes everyone’s needs are the same in fact leaves people out, especially folks who need the most support.

We’ve learned that technology is a crucial tool and that it’s just one thing that keeps us connected to each other as humans.

Finally, we’ve learned that if we work together we can actually make shit happen, despite a world that tells us individual action is meaningless. Like biscuits in a pan, when we connect, we rise together.

Marginalized folks have been saying this shit for years.

More of us than ever see these things now.

And now we can’t, and shouldn’t, unsee it.

Current state:

– Profit first

– Can we?

– Solutionism

– Aesthetics

– “Hard” skills

– Rockstar coders

– Short term thinking

– ShippingFuture state:

– People first: security, privacy, inclusion

– Should we?

– Holistic design

– Accessibility

– Soft skills

– Teams

– Long term thinking

– Sustaining

So let’s talk about the future. I told you this would be a talk about the future.

Like many of y’all I have had a very hard time this year thinking about the future at all. It’s hard to make plans. It’s hard to know what the next few weeks, months, years will look like. And who will be there to see it with us.

But sometimes, when I can think clearly about something besides just making it through every day, I wonder.

What does a people-first digital world look like? Who’s been missing this whole time?

Just because we can do something, does it mean we should?

Will technology actually solve this problem? Are we even defining the problem correctly?

What does it mean to design knowing that even “able-bodied” folks are only temporarily so? And that our products need to be used, by humans, in various contexts and emotional states?

(There are also false binaries here: aesthetics vs. accessibility; abled and disabled; binaries are dangerous!)

How can we nourish our collaborations with each other, with our teams, with our users? And focus on the wisdom of the folks in the room rather than assigning individuals as heroes?

How can we build for maintenance and repair? How do we stop writing checks our future selves to cash – with interest?

Some of this here, I am speaking of as a web user and a web creator. I’ve only ever worked in the public sector. When I talk with folks working in the private sector I always do some amount of translating. At the end of the day, we’re solving many of the same problems.

But what can private-sector workers learn from folks who come from a public-sector organization?

And, as we think about what we build online, how can we also apply that thinking to our real-life communities? What is our role in shaping the public conversation around the use of technologies? I offer a few ideas here, but don’t want them to limit your thinking.

Here’s a thread about public service.

— Dana Chisnell (she / her) (@danachis) February 5, 2021

I don’t have a ton of time left today. I wanted to talk about public service like the very excellent Dana Chisnell here.

Like I said, I’ve worked in the public sector, in higher ed, for a long time. It’s my bread and butter. It’s weird, it’s hard, it’s great.

There’s a lot of work to be done, and it ain’t happening at civic hackathons or from external contractors. The call needs to come from inside the house.

Government should be

– inclusive of all people

– responsive to needs of the people

– effective in its duties & purpose— Dana Chisnell (she / her) (@danachis) February 5, 2021

I want you to consider for a minute how many folks are working in the public sector right now, and how technical expertise — especially in-house expertise — is something that is desperately needed.

Pictured here are the old website and new website for the city of Richmond. I have a whole ‘nother talk about that new Richmond website. I FOIA’d the contracts for this website. There are 112 accessibility errors on the homepage alone. It’s been in development for 3 years and still isn’t in full production.

Bottom line, good government work matters, and it’s hard to find. Important work is put out for the lowest bidder and often external agencies don’t get it right. What would it look like to have that expertise in-house?

We also desperately need lawmakers and citizens who understand technology and ask important questions about ethics and human impact of systems decisions.

Pictured here are some headlines as well as a contract from the City of Richmond. Y’all know we spent $1.5 million on a predictive policing system that will disproportionately harm citizens of color? And that earlier this month, City Council voted to allow Richmond and VCU PD’s to start sharing their data in that system?

The surveillance state abides. Technology facilitates.

I dare say these technologies are designed to bank on the fact that lawmakers don’t know what they’re looking at.

My theory is, in addition to holding deep prejudices, lawmakers are also deeply baffled by technology. The hard questions aren’t being asked, or they’re coming too late, and they’re coming from citizens who have to put themselves in harm’s way to do so.

Technophobia is another harmful element that’s emerged in the past decades. What would a world look like where technology is not a thing to shrug off as un-understandable, but is instead deftly co-designed to meet our needs, rather than licensed to our city for 1.5 million dollars? What if everyone knew that technology is not neutral?

This is some of the future I can see. I hope that it’s sparked new thoughts for you.

Let’s envision a future together. What has the light illuminated for you?

Thank you!